2. Launching applications with PopRun

To understand how PopRun works, it is important to consider the following:

The application to be distributed using the PopDist library

Input data required by the application

Output data generated by the application

Virtual-IPU (V-IPU) utility for allocating IPUs on Pod systems

This guide assumes that you are familiar with the concepts mentioned above. More information on V-IPU can be found in the V-IPU User Guide.

The typical workflow of launching a distributed application on a Pod system involves the following steps:

Login: All Pod systems are equipped with at least one host server. All application launches are made from a host server. Thus, the starting point for any application launch is logging on to a host server.

Distribution: The application to be launched must be implemented so that it can take advantage of multiple IPUs, either within a single IPU-Machine, like an IPU-M2000 or a Bow-2000, or across multiple IPU-Machines as in a Pod system. The PopDist API is used to make applications distributed. Typically, this involves dividing the computation so that it can be executed in parallel. The IPUs involved in the computation can either be located within the same Pod system or in another interconnected Pod system.

Launch: The actual application launch is made by calling PopRun from the command line on a host server.

Allocation: Depending on the resources available to you, including both IPUs and host servers, PopRun will automatically interface with V-IPU to allocate and reserve the resources you need to execute your application.

Execution: The final step is the actual execution, where the application runs on the IPUs.

Note

Some IPU applications may involve the host as part of the computation or for host-side collective operations.

2.1. Launch modes

Applications distributed with PopDist can be launched in several ways. Below are the most common PopRun launch modes:

Single instance: The simplest launch mode. Here, a single instance is launched on the host server to run an application on one or more IPU-Machines located in one Pod system. Each instance runs on a single graph compile domain (GCD), which is a subset of the available IPUs connected by IPU-Links (an IPU-Link domain).

Multi-instance/Single host: In this launch mode, multiple instances are launched on the same host server. Typically, the application targets multiple IPU-Machines. This mode is recommended for applications where the host CPU is used for pre-processing or other I/O tasks. Each instance can run on a separate GCD.

Multi-instance/Multiple hosts: This mode applies to Pod systems that are equipped with multiple host servers. In this mode, multiple instances are launched on the multiple host servers located within a single Pod. This mode is recommended, for example, for applications with special pre- or post-processing needs that must take place on the host CPU.

Multi-instance/Multiple Pods: The most extensive mode where multiple instances are launched on one or more host servers across multiple Pods connected via GW-Links (known as a graph scaleout domain). This mode is recommended for highly scalable IPU applications. Each Pod contains one or more IPU-Link domains (sets of IPUs connected via IPU-Links). The number of IPU-Link domains is specified with the --num-ilds option.
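The four launch modes above differ only in how many instances, host servers and Pods are involved. The following is a minimal sketch of that classification. The function name `launch_mode` and its parameters are hypothetical and are not part of PopRun or PopDist.

```python
def launch_mode(num_instances: int, num_hosts: int = 1, num_pods: int = 1) -> str:
    """Name the launch mode described above for a given topology (illustrative only)."""
    if min(num_instances, num_hosts, num_pods) < 1:
        raise ValueError("instances, hosts and Pods must all be at least 1")
    if num_instances < num_hosts:
        raise ValueError("each host needs at least one instance")
    if num_instances == 1:
        # A single instance runs on one host and targets one Pod system.
        if num_pods > 1:
            raise ValueError("a single instance targets one Pod system")
        return "Single instance"
    if num_pods > 1:
        return "Multi-instance/Multiple Pods"
    if num_hosts > 1:
        return "Multi-instance/Multiple hosts"
    return "Multi-instance/Single host"
```

For example, two instances on one host server map to the Multi-instance/Single host mode, while the same two instances spread over two host servers in one Pod map to Multi-instance/Multiple hosts.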

2.2. Multi-host setup

Launching applications on multiple hosts requires that the host servers be set up so that they can communicate with each other. PopRun uses the SSH network communication protocol (Section 2.2.1, SSH setup), specific configuration of network interfaces (Section 2.2.3, Network interfaces) and a shared file system (Section 2.2.4, File system setup).

Note

It is possible to set up multiple hosts without using a shared file system, but this is not described in this document.

2.2.1. SSH setup

SSH is a secure network communication protocol that uses key-based authentication. We recommend using OpenSSH. This section describes how to install OpenSSH, create an SSH key pair and copy the public key to the other host servers. For more information about SSH, refer to the SSH website.

Note

Use ifconfig to find the IP addresses of the host servers. You will need these IP addresses for the SSH setup.

Install and configure OpenSSH on all hosts

OpenSSH must be installed on each host. You can check if OpenSSH is already installed with:

$ ssh -V

which should output the installed version.

If OpenSSH is not installed, then run the following to install an SSH server and client:

$ sudo apt-get install openssh-server

$ sudo apt-get install openssh-client

Confirm the installation with:

$ ssh -V

Edit the SSH configuration file /etc/ssh/sshd_config and add the following lines:

PubkeyAuthentication yes

Note

It is your responsibility to ensure that the SSH configuration meets your company’s security policies.

Restart the ssh service on all nodes:

$ sudo service ssh restart

Note

You will need the server admin to do this.

Create SSH key pair

Note

Choose one of the hosts to be the parent host. The remaining hosts will be referred to as child hosts. This distinction makes it easier for container-based multi-host launches and is not required if containers are not used.

On each host, create the .ssh directory in the $HOME directory and set the permissions on that directory:

mkdir -p ~/.ssh

chmod 700 ~/.ssh

Use the ssh-keygen tool to generate a new key pair.

ssh-keygen -t [key_alg]

where [key_alg] is the public key algorithm used to generate the key pair. The recommended options are rsa and ed25519. Follow the on-screen instructions to complete the process.

Note

Give your key a name that identifies it as belonging to a specific host, for example id_rsa_parent or id_rsa_child-1.

Verify SSH setup

In order to verify that you have successfully copied the SSH public keys to all hosts, you can try to ssh into each of them. If you get access without being prompted for a password, you are ready to start using PopRun with that host.

Note

For each attempt to connect, you may be warned that the authenticity of the host cannot be confirmed (if you have not connected to it before) and asked whether you want to continue connecting. You should confirm this by entering yes.

For example, from the parent host, try to connect to each child host:

ssh username@ip_of_child

Similarly, from each child host, try to connect to the parent host:

ssh username@ip_of_parent

Should you encounter problems accessing a host when using PopRun, an error will be reported. There are two typical errors that you could encounter:

Host key verification failed: This indicates that the key of the remote host was not accepted by the local host. The key of the host typically needs to be placed in ~/.ssh/known_hosts to be automatically verified. Note that when using ssh in interactive mode, you will be asked if you want to add the remote key the first time you connect. However, when using PopRun, this is not the case as PopRun uses ssh in non-interactive mode. So the easiest way to get the remote host key added is typically to use ssh to log into it the first time.

Permission denied: This indicates that the remote host did not grant access to the local host. The key of the local host typically needs to be placed in ~/.ssh/authorized_keys on the remote host in order to be automatically accepted. This can be resolved by ensuring that the SSH public keys have been copied to the remote host and added to the ~/.ssh/authorized_keys file as in Authorise key pair on all hosts. Note that interactive password authentication is not supported by PopRun.
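The two errors above can be checked for before launching PopRun. The sketch below runs `ssh` with the OpenSSH option `BatchMode=yes`, which disables password prompts and so approximates non-interactive use, and maps the error text to the fix described above. The helper names are hypothetical, and whether PopRun itself invokes `ssh` with these options is an assumption.

```python
import subprocess
from typing import List


def batch_ssh_command(user: str, host: str) -> List[str]:
    """Build a non-interactive ssh call that just runs `true` on the remote host."""
    return ["ssh", "-o", "BatchMode=yes", "-o", "ConnectTimeout=5",
            f"{user}@{host}", "true"]


def diagnose(stderr: str) -> str:
    """Map the two typical SSH errors to the remedy described in this section."""
    if "Host key verification failed" in stderr:
        return "log in once with ssh so the remote key is added to ~/.ssh/known_hosts"
    if "Permission denied" in stderr:
        return "add your public key to ~/.ssh/authorized_keys on the remote host"
    return "unrecognised error: " + stderr.strip()


def check_host(user: str, host: str) -> str:
    """Return 'ok' if key-based login works, otherwise a suggested fix."""
    result = subprocess.run(batch_ssh_command(user, host),
                            capture_output=True, text=True)
    return "ok" if result.returncode == 0 else diagnose(result.stderr)
```

Calling `check_host("username", "ip_of_child")` from the parent host, and the reverse from each child host, mirrors the manual verification steps above.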

2.2.2. PopRun SSH distribution tool

PopRun comes with a tool for SSH key distribution. This is accessed by setting the distribute-ssh-key parameter when calling poprun. When this parameter is set, PopRun will attempt to distribute the key to all hosts specified by the -H or --host flag using ssh-copy-id. Thus, PopRun will prompt the user only once for their password on these hosts.

If your key is located somewhere other than $HOME/.ssh, you can also use distribute-ssh-key-path to specify the folder.

If you set distribute-ssh-key-type, PopRun will attempt to generate a key of the specified type for you. The following key types are supported: dsa, ecdsa, ed25519, and rsa.

2.2.3. Network interfaces

If you run into networking issues, you can try to filter the network interfaces from the control plane or the data plane. The control plane in a network controls how data is forwarded while the data plane handles the actual forwarding. The control plane can operate on either the regular network interface (NIC) or the RDMA network interface (RNIC). However, the data plane only operates through the RNIC, so it needs the RDMA subnet.

Pass the following parameters to --mpi-global-args, which sets the global options to be passed to mpirun:

To include or exclude (respectively) a specific network interface from the control plane: --mca oob_tcp_if_include, --mca oob_tcp_if_exclude.

To include or exclude (respectively) a specific network interface from the data plane: --mca btl_tcp_if_include, --mca btl_tcp_if_exclude.

Refer to the OpenMPI documentation for more information on how to use the following parameters: --mca oob_tcp_if_include, --mca oob_tcp_if_exclude, --mca btl_tcp_if_include and --mca btl_tcp_if_exclude.

Note that PopRun may struggle with network interfaces that have multiple IP addresses configured (see the OpenMPI documentation).
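As an illustration of the parameters described in this section, the sketch below assembles the string that would be passed to --mpi-global-args. The helper name `mpi_interface_args` is hypothetical, and the interface name and subnet in the usage note are placeholders. Check the OpenMPI documentation for the values accepted on your system.

```python
from typing import List, Optional


def mpi_interface_args(control_plane: Optional[str] = None,
                       data_plane: Optional[str] = None,
                       exclude: bool = False) -> str:
    """Build the MCA options that filter control-plane and data-plane interfaces."""
    suffix = "exclude" if exclude else "include"
    parts: List[str] = []
    if control_plane:
        # oob_tcp_* parameters filter the control plane.
        parts += ["--mca", f"oob_tcp_if_{suffix}", control_plane]
    if data_plane:
        # btl_tcp_* parameters filter the data plane.
        parts += ["--mca", f"btl_tcp_if_{suffix}", data_plane]
    return " ".join(parts)
```

For example, `mpi_interface_args(control_plane="eno1", data_plane="10.5.0.0/16")` yields a string that can be passed as `--mpi-global-args="..."` on the poprun command line.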

2.2.4. File system setup

The same file system structure is necessary on all the hosts. In particular, the same versions of the Poplar SDK, application source code, and the dataset used for training should all be at the same location in the file system on all the hosts.

The easiest way to meet these requirements is by using a shared file system. If that is not available, you need to ensure that all the hosts are in sync by performing the necessary copying.
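If no shared file system is available, one way to check that the hosts are in sync is to compare a checksum manifest of the relevant directories. The following is a minimal sketch using only the Python standard library. It is not a PopRun feature, and the function names are hypothetical. You would run `tree_manifest` on each host for the SDK, source and dataset directories and compare the results.

```python
import hashlib
import os
from typing import Dict, List


def tree_manifest(root: str) -> Dict[str, str]:
    """Map every file under root (by relative path) to its SHA-256 digest."""
    manifest: Dict[str, str] = {}
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # make the traversal order deterministic
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            digest = hashlib.sha256()
            with open(path, "rb") as handle:
                # Read in chunks so that large dataset files are not loaded at once.
                for chunk in iter(lambda: handle.read(1 << 20), b""):
                    digest.update(chunk)
            manifest[os.path.relpath(path, root)] = digest.hexdigest()
    return manifest


def differing_paths(a: Dict[str, str], b: Dict[str, str]) -> List[str]:
    """List relative paths that are missing on one side or have different content."""
    return sorted(p for p in set(a) | set(b) if a.get(p) != b.get(p))
```

An empty result from `differing_paths` means that both hosts hold the same files at the same relative locations.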

2.3. Caching compiled executables

Poplar compiles user applications, and generates an executable that can be executed on IPUs. This executable can also be stored on the host, and loaded at a later time. Loading an existing executable reduces the launch time, because the computational graph will not be compiled by Poplar.

Executable caching can be useful in multiple cases, such as:

Running the same application multiple times

Running an application by distributing it to multiple instances

Running an application by distributing it to multiple hosts, and possibly further distributing it across multiple instances on each host

Executable caching reduces the time required to compile large models. Therefore, it is a recommended feature, and PopRun will try to enable it automatically. However, you can still disable executable caching. See the examples in the following sections to understand how executable caching can be disabled, as well as how it can be used.

Note

Framework-specific executable cache settings made through environment

variables such as POPTORCH_CACHE_DIR conflict with the PopRun command

line option --executable-cache-path. Therefore, they must not be

specified at the same time.
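A launch script can guard against the conflict described in the note above before calling poprun. The sketch below is illustrative only. The function name is hypothetical, and POPTORCH_CACHE_DIR is the only framework variable named in this guide, so any others must be supplied by the caller.

```python
from typing import List, Mapping, Sequence


def conflicting_cache_variables(poprun_args: Sequence[str],
                                environ: Mapping[str, str],
                                framework_vars: Sequence[str] = ("POPTORCH_CACHE_DIR",)
                                ) -> List[str]:
    """Return framework cache variables that are set while --executable-cache-path is used."""
    uses_flag = any(arg == "--executable-cache-path"
                    or arg.startswith("--executable-cache-path=")
                    for arg in poprun_args)
    if not uses_flag:
        return []
    return [name for name in framework_vars if environ.get(name)]
```

A non-empty result means one of the two settings should be removed before launching.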

2.4. Application launches

In this section we explain how sample IPU applications can be launched with PopRun using the launch modes described in Section 2.1, Launch modes. For simplicity, we assume that our application is a Python application called train.py. The program arguments are not shown, as they are irrelevant. Moreover, the argument to --vipu-server-host is fictional and used for the sake of the example. Replace it with the host name or the IP address of your own V-IPU server.

See Section 2.8, V-IPU settings to understand how you can adjust PopRun’s V-IPU settings.

2.4.1. Single instance

$ poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=4 --num-instances=1 python3 train.py

Executable caching is disabled by default for single-instance single-host runs.

To enable executable caching, specify

--executable-cache-path=<directory name>. The executable will be built when

the application runs for the first time, and it can be reused by the future

runs.

$ poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=4 --num-instances=1 \
    --executable-cache-path=/tmp/poplar-executable-cache \
    python3 train.py

Note

Using --num-instances=1 and --num-replicas=1 together is not supported

and might give additional overhead and/or raise other issues.

2.4.2. Multi instance / single host

$ poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=8 --num-instances=2 python3 train.py

When a single-host, multi-instance application is launched, PopRun will automatically create an executable cache directory that will be used by all instances to load the executable. One of the instances will build the executable and store it in this directory, while the other instances will load this executable. Any of the instances can build the executable; it is not defined which it will be. PopRun will remove the directory when the program exits either normally or abnormally. Abnormal terminations include any non-zero exit code, and POSIX signals such as SIGKILL and SIGABRT.

You can disable executable caching by specifying an empty string to the

--executable-cache-path command line option. For example:

$ poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=8 --num-instances=2 \
    --executable-cache-path="" \
    python3 train.py

This will disable executable caching, and each instance will compile the graph on its own.

2.4.3. Multi instance / multi host

$ poprun --host 10.3.7.153,10.3.7.154 \
    --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=8 --num-instances=2 python3 train.py

The key takeaway from the command line shown above is that the hosts involved are passed to PopRun. You can use either the host names or IP addresses for this.

Note that PopRun will unset the IPUOF_CONFIG_PATH environment variable on

all the instances, including the ones on remote hosts, before launching the

application.

When PopRun launches a multi-host application, it will automatically create unique executable cache directories on each host. PopRun will remove these directories when the application exits, whether normally or abnormally. Abnormal terminations include any non-zero exit code, and POSIX signals such as SIGKILL and SIGABRT.

If the host needs to cache the executable for more than one execution (that is,

running the same model multiple times), then you must specify

--executable-cache-path to use a fixed location. You are then

responsible for deleting the cached executables when they are no longer

required.

$ poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=4 --num-instances=1 \
    --executable-cache-path=/tmp/poplar-executable-cache \
    python3 train.py

You can disable executable caching by specifying an empty string to

the --executable-cache-path command line option. For example:

$ poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=4 --num-instances=1 \
    --executable-cache-path="" \
    python3 train.py

This will disable executable caching, and each instance on each host will compile the graph on its own.
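The launch commands in this section follow one pattern, which a wrapper script can assemble programmatically. The sketch below builds an argument list suitable for `subprocess.run`, using only options that appear in the examples above. The function name `poprun_command` and its parameters are hypothetical.

```python
from typing import List, Optional, Sequence


def poprun_command(app: Sequence[str], num_replicas: int, num_instances: int,
                   partition: str, hosts: Sequence[str] = (),
                   cache_path: Optional[str] = None) -> List[str]:
    """Assemble a poprun argument list for the launch modes shown above."""
    cmd = ["poprun"]
    if hosts:
        # Multi-host launches pass a comma-separated list of host names or IP addresses.
        cmd += ["--host", ",".join(hosts)]
    cmd += [f"--vipu-partition={partition}",
            "--mpi-global-args=--tag-output",
            f"--num-replicas={num_replicas}",
            f"--num-instances={num_instances}"]
    if cache_path is not None:
        # An empty string disables executable caching; a directory fixes its location.
        cmd.append(f"--executable-cache-path={cache_path}")
    return cmd + list(app)
```

Passing `cache_path=""` reproduces the caching-disabled examples, while leaving it as `None` keeps PopRun's default behaviour for the chosen launch mode.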

2.5. Process placement and non-uniform memory access (NUMA)

Process placement is a mechanism that controls where instances are executed on the available hardware resources. This is useful since in a non-uniform memory access (NUMA) system, the time to access memory depends on the memory location relative to the CPU core it is accessed from. In addition, different placement strategies result in different trade-offs between memory bandwidth and inter-process communication/synchronization performance. Note that tuning process placement is a highly application and hardware-specific task.

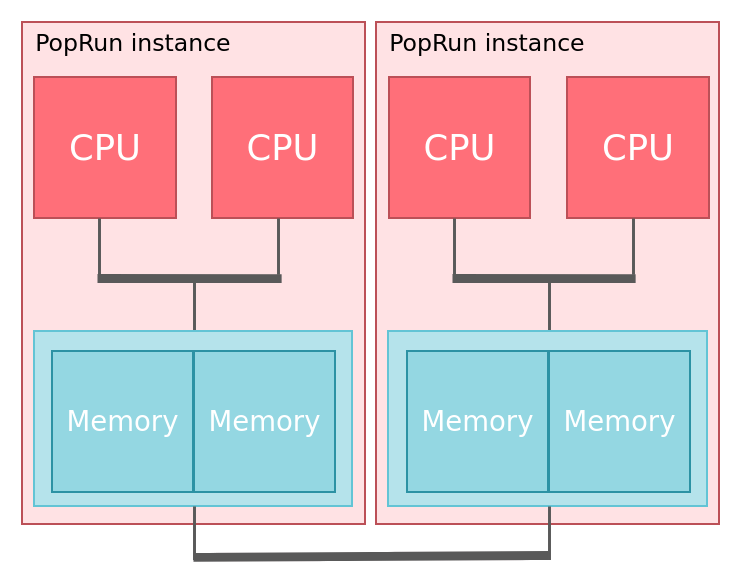

Fig. 2.1 NUMA-aware PopRun with two instances on a system with two NUMA nodes, each consisting of two CPUs and two memory slots. Each instance is bound to the CPUs and memory of its assigned NUMA node to ensure memory access locality.

PopRun exposes this control with the --process-placement flag and provides

multiple pre-defined strategies. By default (and with --process-placement spreadnuma),

PopRun is designed to be NUMA-aware. On each host, all the available NUMA nodes

are divided among the instances. This means that each instance is bound to execute

on and allocate memory from its assigned NUMA nodes, ensuring memory access

locality. This strategy maximises memory bandwidth and is likely to yield optimal

performance for most of the data loading workloads in machine learning. Note that

while an instance prefers to allocate memory from its associated NUMA node, it

may allocate from other nodes if the associated memory is oversubscribed. This

might be necessary in cases of asymmetric memory consumption, for example when

only one of the instances performs model compilation.

Other strategies provided by PopRun are --process-placement scattersocket,

--process-placement scatternuma and --process-placement close.

The scatter-based strategies force occupied cores to be scattered apart as much as possible, either among available sockets or NUMA nodes. This approach maximises memory bandwidth and makes sure each instance is bound to a specific core, which works well for certain kinds of applications.

The close strategy binds each instance to a specific core (similarly to the scatter

strategies above) but places each instance on a consecutive core. This approach works well

for applications with a lot of communication and/or synchronization. It is important

to note that it might result in underutilisation (or imbalance) of CPU cores

if the number of instances is lower than the number of cores (or not divisible by them).

All strategies with the exception of the spreadnuma strategy bind each instance to a

specific core and thus should not be used with applications that spawn multiple sub-threads

or sub-processes as all children will compete for the same core.

If you need more fine-grained control over process placement and binding, PopRun

allows for --process-placement disabled. In this mode, PopRun will not try to set

any of the process placement settings and will respect user specified MPI flags --map-by

and --bind-to passed in via the --mpi-global-args.
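The guidance in this section can be condensed into a small pre-launch check. The sketch below encodes which strategies bind each instance to a single core, as stated above, and warns when such a strategy is combined with an application that spawns sub-threads or sub-processes. The names are hypothetical and the check is not performed by PopRun itself.

```python
# Strategies that bind each instance to one specific core, per the text above.
CORE_BOUND_STRATEGIES = {"scattersocket", "scatternuma", "close"}
ALL_STRATEGIES = CORE_BOUND_STRATEGIES | {"spreadnuma", "disabled"}


def check_placement(strategy: str, spawns_children: bool) -> str:
    """Return 'ok' or a warning for the chosen --process-placement value."""
    if strategy not in ALL_STRATEGIES:
        raise ValueError(f"unknown process placement strategy: {strategy}")
    if strategy in CORE_BOUND_STRATEGIES and spawns_children:
        return ("warning: all sub-threads and sub-processes will compete "
                "for the single core bound to each instance")
    return "ok"
```

For a data-loading application that starts worker processes, `check_placement("close", True)` returns the warning, whereas the default `spreadnuma` strategy passes.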

2.6. Offline compilation

PopRun provides a mechanism that improves offline compilation in scenarios where IPU devices are not available in a system. In addition to using --offline-mode=yes, you can specify --offline-target=<target> to instruct PopRun to set the correct POPLAR_TARGET_OPTIONS based on the selected <target>. Use poprun --help to see all of the available options.

Note

Using the offline mode makes sure PopRun itself does not try to attach to any devices but does not prevent the application run by PopRun from attaching to the IPUs.

Example command that compiles a model and stores the executable in the my_cache directory:

$ poprun --num-instances=4 --num-replicas=8 --ipus-per-replica=2 \
    --offline-mode=yes --offline-target=pod16 --executable-cache-path=my_cache \
    python3 compile.py

Example command for running the compiled executable that was stored in the my_cache directory:

$ poprun --num-instances=4 --num-replicas=8 --ipus-per-replica=2 \
    --vipu-partition=my_partition --executable-cache-path=my_cache \
    python3 train.py

2.7. Using with PopVision Graph Analyser

When profiling a Poplar program using PopRun, it is often enough to specify

the --autoreport-dir command line option. This option will set the

profiler’s output directory, as well as the necessary environment variables that

enable profiling, if they aren’t already turned on.

2.7.1. POPLAR_ENGINE_OPTIONS and PopVision Graph Analyser

The POPLAR_ENGINE_OPTIONS environment variable can be used to enable or

disable profiling, set the directory name for profiling output, as well as tune

various profiling settings. See Capturing IPU reports

in the PopVision Graph Analyser User Guide

to see the available options and how to start profiling your code.

PopRun will try to adjust profiling settings based on your launch configuration. You can still tune the profiling settings if you want. However, the default settings will work in most cases.

Note

PopRun’s --autoreport-dir cannot be used when POPLAR_ENGINE_OPTIONS has autoReport.directory set because PopDist will set a profile directory for each instance.

2.7.2. Launching without POPLAR_ENGINE_OPTIONS

If the POPLAR_ENGINE_OPTIONS environment variable isn’t set, and you launch

the program for profiling with --autoreport-dir /profile/directory, then

PopRun will set the POPDIST_AUTOREPORT_DIRECTORY environment variable to the

directory name specified with --autoreport-dir on all instances. The

profiler will use this directory name as the parent directory to write the

output for each instance. That is, the directory name specified to

--autoreport-dir will contain subdirectories for each instance.

The value of --autoreport-dir is passed to PopDist through the

POPDIST_AUTOREPORT_DIRECTORY environment variable. PopDist will then set the

autoReport.directory, and autoReport.all settings automatically. PopDist

also sets autoReport.directory in poplar::OptionFlags when its

popdist::setEngineOptions function is called from a Poplar program.

$ poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=4 --num-instances=4 \
    --autoreport-dir /tmp/training-profile-directory \
    python3 train.py

$ ls -1 /tmp/training-profile-directory

0

1

2

3

Note

PopRun sets the autoReport.directory name when --autoreport-dir is specified, regardless of whether there is a single instance or multiple instances. In the single-instance case, only <directory name>/0 will be used.
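The per-instance layout described above can be computed ahead of time, for example to collect the reports after a run. This is a minimal sketch that assumes the subdirectories are named after the instance index, as in the listing above. The function name is hypothetical.

```python
import posixpath
from typing import List


def instance_report_dirs(autoreport_dir: str, num_instances: int) -> List[str]:
    """List the profile subdirectories expected under --autoreport-dir."""
    if num_instances < 1:
        raise ValueError("num_instances must be at least 1")
    # One subdirectory per instance, numbered from 0.
    return [posixpath.join(autoreport_dir, str(index))
            for index in range(num_instances)]
```

With four instances and `/tmp/training-profile-directory`, the result matches the four directories `0` to `3` listed above. With a single instance, only the `0` subdirectory is returned.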

2.7.3. Launching with POPLAR_ENGINE_OPTIONS

Specifying autoReport.directory in POPLAR_ENGINE_OPTIONS conflicts with PopRun’s --autoreport-dir command line option. This is because PopDist will modify the autoReport.directory setting for each instance so that the directory name specified in PopRun’s --autoreport-dir contains subdirectories for each instance. Since PopRun passes the POPLAR_ENGINE_OPTIONS environment variable to all instances automatically, it would be impossible to distinguish individual instances’ profiling data.

The POPLAR_ENGINE_OPTIONS environment variable will be preserved and passed

to all instances. If the POPLAR_ENGINE_OPTIONS environment variable doesn’t

contain any profiling-related value, then PopDist will enable the

autoReport.all setting automatically.

$ POPLAR_ENGINE_OPTIONS='{"allowOutOfMemory":"true"}' \
    poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=4 --num-instances=4 \
    --autoreport-dir /tmp/training-profile-directory \
    python3 train.py

$ ls -1 /tmp/training-profile-directory

0

1

2

3

Note

PopRun sets the autoReport.directory name when --autoreport-dir is specified, regardless of whether there is a single instance or multiple instances. In the single-instance case, only <directory name>/0 will be used.

You can still run the program with --autoreport-dir specified, but without

profiling.

$ POPLAR_ENGINE_OPTIONS='{"autoReport.all":"false"}' \
    poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=4 --num-instances=4 \
    --autoreport-dir /tmp/training-profile-directory \
    python3 train.py

$ ls -1 /tmp/training-profile-directory

ls: cannot access '/tmp/training-profile-directory': No such file or directory

Specifying the autoReport.directory in the POPLAR_ENGINE_OPTIONS

environment variable through --mpi-local-args and

--instance-mpi-local-args also conflicts with the --autoreport-dir

command line option.

$ poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=4 --num-instances=4 \
    --autoreport-dir /tmp/training-profile-directory \
    --mpi-local-args '-x POPLAR_ENGINE_OPTIONS='{"autoReport.directory":"/tmp/training/profile-directory"}'' \
    python3 train.py

POPRUN ERROR: autoreport-dir command line argument cannot be specified when

POPLAR_ENGINE_OPTIONS contains autoReport.directory setting.

$ poprun --vipu-partition=my_partition --mpi-global-args="--tag-output" \
    --num-replicas=4 --num-instances=4 \
    --autoreport-dir /tmp/training-profile-directory \
    --instance-mpi-local-args '0:-x POPLAR_ENGINE_OPTIONS='{"autoReport.directory":"/tmp/training-profile-directory/0"}'' \
    python3 train.py

POPRUN ERROR: autoreport-dir command line argument cannot be specified when

POPLAR_ENGINE_OPTIONS contains autoReport.directory setting.
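The conflict that produces the error above can be detected in a launch script before poprun is called. The sketch below parses the JSON value of POPLAR_ENGINE_OPTIONS and looks for the autoReport.directory key. It illustrates the rule only and is not PopRun's own implementation. The function name is hypothetical.

```python
import json
from typing import Optional


def autoreport_conflict(engine_options: Optional[str],
                        autoreport_dir: Optional[str]) -> bool:
    """True if --autoreport-dir is combined with autoReport.directory in the options."""
    if not autoreport_dir or not engine_options:
        return False
    options = json.loads(engine_options)
    return "autoReport.directory" in options
```

Options such as `{"allowOutOfMemory":"true"}` or `{"autoReport.all":"false"}` do not trigger the conflict, which matches the successful launches shown earlier in this section.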

2.8. V-IPU settings

PopRun offers the following command line options to adjust V-IPU related settings. Using the correct V-IPU server, and a partition that is suitable for the application can only be achieved by adjusting V-IPU settings correctly.

2.8.1. V-IPU server address

PopRun accepts the --vipu-server-host command line option to specify the host

name or the IP address and the --vipu-server-port option to specify the port number of the V-IPU server.

If you don’t specify --vipu-server-host, PopRun will try to read the

environment variable IPUOF_VIPU_API_HOST before attempting to

contact the default V-IPU server configured when the system was installed. If

you have more than one V-IPU server or V-IPU isn’t configured on the host that

is launching PopRun, then you need to specify the correct V-IPU server name with

the --vipu-server-host option.

The default value for --vipu-server-port is 8090. Before using the default

value, PopRun will try to read the environment variable IPUOF_VIPU_API_PORT.

If the V-IPU server you want to contact is assigned a different port number,

you need to specify the correct port number with the --vipu-server-port option.

Creating, resetting, or destroying partitions might take a long time depending on the sizes of the cluster, allocation and partition, network configuration, CPU load, and other factors. Therefore, in rare cases, you might need to increase the V-IPU server timeout. This can be done with the --vipu-server-timeout option, whose value is in seconds.
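The order in which the server address is resolved, as described in this section, can be sketched as follows: command line option first, then the environment variable, then the default. The function name is hypothetical. A host of `None` stands for the default V-IPU server configured when the system was installed, which this sketch does not look up.

```python
import os
from typing import Mapping, Optional, Tuple

DEFAULT_VIPU_PORT = 8090


def resolve_vipu_server(cli_host: Optional[str] = None,
                        cli_port: Optional[int] = None,
                        environ: Optional[Mapping[str, str]] = None
                        ) -> Tuple[Optional[str], int]:
    """Apply the precedence: command line, then IPUOF_VIPU_API_*, then default."""
    env = os.environ if environ is None else environ
    host = cli_host or env.get("IPUOF_VIPU_API_HOST") or None
    if cli_port is not None:
        port = cli_port
    else:
        port = int(env.get("IPUOF_VIPU_API_PORT", DEFAULT_VIPU_PORT))
    return host, port
```

Passing an explicit `environ` mapping makes the behaviour easy to check without modifying the real environment.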

2.8.2. PopRun and IPU over fabric settings

Poplar applications that are running on Graphcore Pods require the IPUoF library, which enables the Poplar software stack to communicate with remote IPUs. The preferred method for the IPUoF library to obtain a list of these IPUs is to query the partition information on the V-IPU server.

This means that the IPUoF library needs to know how to connect to the V-IPU server. The information about the V-IPU server is defined in a set of environment variables.

These environment variables are:

IPUOF_VIPU_API_PARTITION_ID: Name of the partition to use. This string must be defined and must not be empty.

IPUOF_VIPU_API_HOST: Host name or the IP address of the V-IPU server. This string must be defined and must not be empty.

IPUOF_VIPU_API_PORT: Port number of the V-IPU server. This is an optional environment variable. If provided, it must be a valid port number. The default value is 8090.

IPUOF_VIPU_API_GCD_ID: Graph compilation domain ID of the partition. This value might be optional, depending on the partition configuration. See Multi-GCD partitions in the V-IPU User Guide for more information about multi-GCD partitions.

When an application is launched with PopRun, the IPUOF_VIPU_* environment

variables will be set by PopRun. IPUOF_VIPU_API_PARTITION_ID, IPUOF_VIPU_API_HOST

and IPUOF_VIPU_API_PORT, if set, will be used if values are not provided via

the command line options --vipu-partition, --vipu-server-host and --vipu-server-port.

PopRun will update the environment variables in its own process and the child processes will inherit PopRun’s environment variables. PopRun controls the V-IPU partition configuration, and therefore it needs to configure the IPUoF library across all hosts and instances.

2.8.3. V-IPU partition, cluster, and allocation

If you don’t specify --vipu-partition or set IPUOF_VIPU_API_PARTITION_ID,

and there is only one partition on the V-IPU allocation, PopRun will use that partition.

If you have more than one partition, then you must specify the partition name you want to use.

To list the available partitions, you can use the following vipu command:

$ vipu list partition

See the V-IPU User Guide for more information about V-IPU and its tools.

Some applications require a certain partition configuration. For example, to train a model with 32 replicas distributed to two program instances, a partition with 32 IPUs that is made of two GCDs can be used. In these cases, it is important to either create a suitable partition beforehand with the vipu tool or ask PopRun to create one that meets the requirements specified on the command line.

If you already have created a partition that is suitable for your application,

all you need to do is to specify the --vipu-partition option.

If you want PopRun to create a suitable partition for your application, you need to describe the key features of the requested partition to PopRun with the following command line options:

--vipu-partition: Create or use the specified V-IPU partition. If not set and if IPUOF_VIPU_API_PARTITION_ID is not set, and if there is only one partition, PopRun will use that one partition.

--vipu-allocation: Use the specified V-IPU allocation when creating a partition.

--num-replicas: The total number of replicas.

--num-ilds: The number of IPU-Link domains. If not set, determined by the V-IPU server by default.

--num-instances: The number of instances. The replicas are divided evenly among the instances.

The following launch expects a partition with 32 replicas and 2 GCDs. The default value of --ipus-per-replica is 1. Therefore, the program will use 32 IPUs in total. PopRun will launch two instances, and each will attach to a 16-IPU device. The V-IPU server’s address is vipu.my_domain, and it is serving on port 34521. It will use the partition my_partition. In this example, the V-IPU server must have a partition with the following configuration:

Name: my_partition

Number of IPUs: 32

Number of GCDs: 2

Number of replicas: 32

Number of ILDs: 2

$ poprun --vipu-partition=my_partition \
    --vipu-server-host=vipu.my_domain --vipu-server-port=34521 \
    --num-replicas=32 --num-instances=2 --num-ilds=2 python3 train.py
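The sizes quoted for the launch above follow from simple arithmetic on the command line options: the total number of IPUs is the number of replicas multiplied by the IPUs per replica, and the replicas are divided evenly among the instances. The sketch below works this out. The function name is hypothetical.

```python
from typing import Dict


def partition_requirements(num_replicas: int, num_instances: int,
                           ipus_per_replica: int = 1) -> Dict[str, int]:
    """Derive the IPU counts implied by the replica and instance options."""
    if num_replicas % num_instances != 0:
        raise ValueError("replicas must divide evenly among the instances")
    total_ipus = num_replicas * ipus_per_replica
    return {
        "total_ipus": total_ipus,
        "replicas_per_instance": num_replicas // num_instances,
        "ipus_per_instance": total_ipus // num_instances,
    }
```

For `--num-replicas=32 --num-instances=2` with the default of one IPU per replica, this gives 32 IPUs in total and 16 IPUs per instance, in line with the description above.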

In addition to these, you can also ask PopRun to remove or reset the partition.

--remove-partition: If you asked PopRun to create a partition, setting this option to no means that PopRun will not remove the partition when it exits. The default value is yes.

--update-partition: If the current partition configuration doesn’t satisfy the requirements specified on the command line (for example, --num-ilds), PopRun can update the partition (remove it and create it again with the same name) so that its settings match the ones specified on the command line. Possible values are yes or no. The default value is no.

--reset-partition: Reset the V-IPU partition before execution. If the option isn’t specified, non-reconfigurable partitions are reset by default, while reconfigurable partitions are not.

Note

PopRun never creates a reconfigurable partition. Any created or updated partition will be non-reconfigurable, and set up to meet a certain set of requirements. Reusing a non-reconfigurable partition for different programs might not work as anticipated. If the program fails with a host sync timeout, it is likely that the partition configuration doesn’t meet the program’s requirements.

The following launch is very similar to the one above. The main difference is that by specifying --update-partition=yes, we are granting PopRun permission to remove my_partition if it exists and doesn’t fit the specified requirements. After removal, or if the partition doesn’t exist, PopRun will create a suitable one with the same name under the V-IPU allocation called allocation1. Likewise, by specifying --remove-partition=no, we are asking PopRun not to remove the partition after it finishes. If this isn’t specified, PopRun would remove the partition when it finishes because the default value of --remove-partition is yes.

$ poprun --vipu-partition=my_partition --vipu-allocation=allocation1 \
    --vipu-server-host=vipu.my_domain --vipu-server-port=34521 \
    --update-partition=yes --remove-partition=no \
    --num-replicas=32 --num-instances=2 --num-ilds=2 python3 train.py
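The interaction between --update-partition and --remove-partition can be summarised as a small decision model. This is a sketch of the behaviour described in the text, not PopRun’s actual code. The function name plan_partition_actions and the action strings are hypothetical, and failing when no suitable partition exists and --update-partition=no is an assumption based on the troubleshooting section.

```python
def plan_partition_actions(exists, fits, update_partition="no", remove_partition="yes"):
    """Model the partition steps described above (illustrative only)."""
    actions = []
    created = False
    if exists and fits:
        actions.append("use existing partition")
    elif update_partition == "yes":
        if exists:
            # The partition exists but does not fit the requirements.
            actions.append("remove existing partition")
        actions.append("create partition")
        created = True
    else:
        # Assumption: without permission to update, the launch cannot proceed.
        actions.append("fail: no suitable partition")
        return actions
    actions.append("run program")
    # Only a partition created by this launch is removed on exit.
    if created and remove_partition == "yes":
        actions.append("remove partition on exit")
    return actions


# The launch above: my_partition exists but does not fit the requirements.
print(plan_partition_actions(True, False, update_partition="yes", remove_partition="no"))
# ['remove existing partition', 'create partition', 'run program']
```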

2.9. Storing and loading command line arguments

PopRun command line arguments, combined with those of the application being launched, can become long and it might be tedious to type them out before every launch.

You can export a set of command line arguments to a file once with --export-config, and load them again with --import-config when they are needed. You can also copy this file to other hosts from which you launch PopRun.

For example:

$ poprun --export-config training.conf --vipu-partition=my_partition \
    --vipu-allocation=allocation1 \
    --vipu-server-host=vipu.my_domain --vipu-server-port=34521 \
    --update-partition=yes --remove-partition=no \
    --num-replicas=32 --num-instances=2 --num-ilds=2 \
    --host pod[1-4] \
    python3 train.py

Note

When --export-config is set, PopRun continues launching the program.

Subsequent launches that have the same configuration can use the training.conf file. This is the same as repeating the command line arguments.

$ poprun --import-config training.conf

Command line arguments passed to PopRun override the values stored in the config file during launch. That is, if you specify both --import-config and a command line argument, the command line argument’s value will be used.

For example, the following launch overrides the --num-instances value.

$ poprun --import-config training.conf --num-instances=4 \
    python3 train.py --hyper-param=1

--num-instances was stored as 2 before. However, this launch will use 4 instances. Also, the training program will receive a --hyper-param=1 argument which wasn’t specified before.
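The override rule can be pictured as a dictionary merge in which command line values take precedence. This is only an illustration of the precedence. The dictionaries below are hypothetical and do not reflect the actual format of the file written by --export-config.

```python
# Values stored by the earlier --export-config launch (a subset, for illustration).
stored = {"num-replicas": "32", "num-instances": "2", "num-ilds": "2"}

# Options given on the command line next to --import-config.
overrides = {"num-instances": "4"}

# Later keys win in a dict merge, so command line values replace stored ones.
effective = {**stored, **overrides}

print(effective["num-instances"])  # 4
print(stored["num-instances"])     # 2
```

The merge leaves the stored dictionary untouched, in the same way that overriding an option for one launch does not change the value saved in the configuration file.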

If the configuration file already exists, PopRun will not overwrite it by default. Instead, it appends the current timestamp to the file name. You can allow overwriting an existing configuration file by specifying --overwrite-config=yes and disallow it by specifying --overwrite-config=no.
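The file naming rule can be sketched as follows. The helper export_path is hypothetical, and the timestamp suffix format used here is an assumption, since the format PopRun actually uses is not stated in this guide.

```python
import os
import time


def export_path(path, overwrite="no", now=None):
    """Pick the file name to write the configuration to (illustrative only).

    The suffix format is hypothetical; PopRun's real format may differ.
    """
    if overwrite == "yes" or not os.path.exists(path):
        return path
    # The file exists and must not be overwritten: append a timestamp.
    stamp = time.strftime("%Y%m%d-%H%M%S", time.localtime(now))
    return f"{path}.{stamp}"


# With overwriting allowed, the requested name is always used.
print(export_path("training.conf", overwrite="yes"))  # training.conf
```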

Note

Overriding an option doesn’t write the new value to the config file. To update the stored value, you must specify all arguments with their new values, and export again using --export-config.

Note

--export-config and --import-config cannot be used at the same time.

2.10. Troubleshooting and solutions to common problems

2.10.1. PopRun cannot find or create partition

If PopRun cannot find or create a suitable partition, you can try the following steps:

List partitions, and check whether the partition name you specified exists:

$ vipu list partition
 Cluster | Allocation | Partition | ILDs | GW Routing | ILD Routing | Size | GCDs | State
-----------------------------------------------------------------------------------------------
 example | example    | part1     | 1    | DEFAULT    | DNC         | 16   | 1    | ACTIVE
 example | example    | part2     | 1    | DEFAULT    | DNC         | 16   | 1    | ACTIVE
 example | example    | part3     | 2    | DEFAULT    | DNC         | 32   | 2    | ACTIVE
-----------------------------------------------------------------------------------------------

If the partition name isn’t in the list, you can try to create a new one or ask PopRun to create one for you by setting the command line option --update-partition=yes.

If the partition name is in the list, it might not be compatible with the other PopRun command line options, such as --num-ilds or --num-replicas. Again, you can use --update-partition=yes to remove an existing partition and create a compatible one with the same name.

Check connectivity to the V-IPU server

You can run V-IPU tools to check whether connection to the V-IPU server works. For example:

$ vipu list partition
unable to connect to VIRM/V-IPU Controller at 127.0.0.1:8090: context deadline exceeded

This error shows that no V-IPU server could be reached at 127.0.0.1 (localhost). Depending on your Pod configuration, the V-IPU server might be installed on another host.

If you have access to the host that is supposed to run the V-IPU server, you can check whether the server is currently running on that host with systemd’s systemctl command:

$ systemctl status vipu-server

Note

If you are using a cloud-based IPU system, then you will not have access to the host running the V-IPU server and will need to contact your cloud service provider’s support for assistance.

Check that the cluster/allocation has enough IPUs

If you asked PopRun to update or create a partition and it failed, it is possible that the V-IPU cluster or allocation does not have enough available IPUs that match the requirements. You might need to remove some existing partitions or change the program’s requirements if possible.
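The first check above, looking for a partition name in the listing and reading its size, GCD count and ILD count, can be automated with a short script. This is a minimal sketch that assumes the listing keeps the pipe-separated layout shown in the sample output. The helpers parse_partitions and find_partition are hypothetical and not part of V-IPU or PopRun.

```python
# Sample text in the layout of the `vipu list partition` output shown earlier.
SAMPLE = """\
 Cluster | Allocation | Partition | ILDs | GW Routing | ILD Routing | Size | GCDs | State
-----------------------------------------------------------------------------------------
 example | example | part1 | 1 | DEFAULT | DNC | 16 | 1 | ACTIVE
 example | example | part2 | 1 | DEFAULT | DNC | 16 | 1 | ACTIVE
 example | example | part3 | 2 | DEFAULT | DNC | 32 | 2 | ACTIVE
-----------------------------------------------------------------------------------------
"""


def parse_partitions(text):
    """Parse the pipe-separated table into a list of dicts keyed by column name."""
    rows = [line for line in text.splitlines() if "|" in line]
    if not rows:
        return []
    header = [cell.strip() for cell in rows[0].split("|")]
    return [
        dict(zip(header, (cell.strip() for cell in row.split("|"))))
        for row in rows[1:]
    ]


def find_partition(text, name):
    """Return the row for the named partition, or None if it is not listed."""
    for row in parse_partitions(text):
        if row["Partition"] == name:
            return row
    return None


part = find_partition(SAMPLE, "part3")
print(part["Size"], part["GCDs"], part["ILDs"])  # 32 2 2
print(find_partition(SAMPLE, "my_partition"))    # None
```

A None result corresponds to the case where the partition name isn’t in the list. A row whose Size, GCDs or ILDs values differ from the launch requirements corresponds to the incompatible case.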

2.10.2. Program cannot acquire devices

If the launched program cannot acquire a device, it usually means either of the following:

The device is in use: Launch gc-monitor to see if the device is in use by another program. IPUs cannot be shared by different programs.

The device is in error state: Launch gc-info -l. If you cannot see a list of available devices, then one or more agents in the partition might be in error state. Try resetting the partition with vipu reset partition partition-name by replacing partition-name with your partition’s name.

Note

Partition listing or manipulation through V-IPU server and subsequent device acquisition are non-atomic operations. That is, partition listing or creation, and the device acquisition are two distinct steps.

A partition could be destroyed, or its devices could be partly or completely allocated by another program, after PopRun sees it but before it acquires devices from the partition.