5. Efficient data batching

By default PopTorch will process the batch_size which you provided to

the poptorch.DataLoader.

When using the other options below, the actual number of samples used per step varies to allow the IPU(s) to process data more efficiently.

However, the effective (mini-)batch size for operations which depend on it (such as batch normalization) will not change. All that changes is how much data is actually sent for a single step.

Note

Failure to use poptorch.DataLoader may result in

accidentally changing the effective batch size for operations which depend on

it, such as batch normalization.

You can find a detailed tutorial regarding efficient data loading, batching and tuning relevant hyperparameters in PopTorch on Graphcore’s tutorials repository: PopTorch tutorial: Efficient data loading.

5.1. poptorch.DataLoader

PopTorch provides a thin wrapper around the traditional torch.utils.data.DataLoader

to abstract away some of the batch sizes calculations. If poptorch.DataLoader

is used in a distributed execution environment, it will ensure that each process uses

a different subset of the dataset.

If you set the DataLoader batch_size to more than 1 then each operation

in the model will process that number of elements at any given time.

See below for usage example.

5.2. poptorch.AsynchronousDataAccessor

To reduce host overhead you can offload the data loading process to a

separate thread by specifying mode=poptorch.DataLoaderMode.Async in the

DataLoader constructor. Internally this uses an

AsynchronousDataAccessor. Doing this allows you to reduce

the host/IPU communication overhead by using the time that the IPU is running

to load the next batch on the CPU. This means that when the IPU is finished

executing and returns to host the data will be ready for the IPU to pull in again.

1 opts = poptorch.Options()

2 opts.deviceIterations(device_iterations)

3 opts.replicationFactor(replication_factor)

4

5 loader = poptorch.DataLoader(opts,

6 ExampleDataset(shape=shape,

7 length=num_tensors),

8 batch_size=batch_size,

9 num_workers=num_workers,

10 mode=poptorch.DataLoaderMode.Async)

11

12 poptorch_model = poptorch.inferenceModel(model, opts)

13

14 for it, (data, _) in enumerate(loader):

15 out = poptorch_model(data)

Warning

AsynchronousDataAccessor makes use of the Python

multiprocessing module’s spawn start method. Consequently, the entry point of

a program that uses it must be guarded by a if __name__ == '__main__': block

to avoid endless recursion. The dataset used must also be picklable. For more

information, please see https://docs.python.org/3/library/multiprocessing.html#the-spawn-and-forkserver-start-methods.

Warning

Tensors being iterated over using an

AsynchronousDataAccessor use shared memory. You must clone

tensors at each iteration if you wish to keep their references outside of each

iteration.

Consider the following example:

predictions, labels = [], []

for data, label in dataloader:

predictions += poptorch_model(data)

labels += label

The predictions list will be correct because it’s producing a new tensor from the

inputs. However, The list labels will contain identical references. This line

would need to be replaced with the following:

labels += label.detach().clone()

5.2.1. Rebatching iterable datasets

There are two types of datasets in PyTorch : map-style datasets and iterable datasets.

As explained in the notes of PyTorch’s Data Loading Order and Sampler : for IterableDataset : “When fetching from iterable-style datasets with multi-processing, the drop_last argument drops the last non-full batch of each worker’s dataset replica.”

This means that if the number of elements is naively divided among the number of workers (which is the default behaviour) then potentially a significant number of elements will be dropped.

For example:

num_tensors = 100

num_workers = 7

batch_size = 4

per_worker_tensors = ceil(100 / num_workers) = 15

last_worker_tensors = 100 - (num_workers - 1) * per_worker_tensors = 10

num_tensors_used = batch_size * (floor(per_worker_tensors / batch_size) * (num_workers - 1) + floor(last_worker_tensors / batch_size))

= 80

This means in this particular case 20% of the dataset will never be used. But, in general the larger the number of workers and the batch size, the more data will end up being unused.

To work around this issue PopTorch has a mode=poptorch.DataLoaderMode.AsyncRebatched.

PopTorch will set the batch_size in the PyTorch Dataset and DataLoader to 1 and will instead create the batched tensors in its worker process.

The shape of the tensors returned by the DataLoader will be the same as before, but the number of used tensors from the dataset will increase to

floor(num_tensors / batch_size) * batch_size (which means all the tensors would be used in the example above).

Note

This flag is not enabled by default because the behaviour is different from the upstream DataLoader.

5.3. poptorch.Options.deviceIterations

If you set deviceIterations() to more

than 1 then you are telling PopART to execute that many batches in sequence.

Essentially, it is the equivalent of launching the IPU in a loop over that number of batches. This is efficient because that loop runs on the IPU directly.

Note that the returned output dimensions depend on poptorch.Options.anchorMode. The default value for trainingModel is Final, since you will often not need to

receive all or any of the output tensors and it is more efficient not to receive them. Therefore, only the last batch of data will be returned to the host under this setting.

You can change this behaviour by setting the value of poptorch.Options.anchorMode to All. This returns the result of each batch to the host. See poptorch.Options.anchorMode()

for more information.

1from functools import reduce

2from operator import mul

3

4import torch

5import poptorch

6

7

8class ExampleModelWithLoss(torch.nn.Module):

9 def __init__(self, data_shape, num_classes):

10 super().__init__()

11

12 self.fc = torch.nn.Linear(reduce(mul, data_shape), num_classes)

13 self.loss = torch.nn.CrossEntropyLoss()

14

15 def forward(self, x, target=None):

16 reshaped = x.reshape([x.shape[0], -1])

17 fc = self.fc(reshaped)

18

19 if target is not None:

20 return fc, self.loss(fc, target)

21 return fc

22

23

24class ExampleDataset(torch.utils.data.Dataset):

25 def __init__(self, shape, length):

26 super().__init__()

27 self._shape = shape

28 self._length = length

29

30 self._all_data = []

31 self._all_labels = []

32

33 torch.manual_seed(0)

34 for _ in range(length):

35 label = 1 if torch.rand(()) > 0.5 else 0

36 data = torch.rand(self._shape) + label

37 data[0] = -data[0]

38 self._all_data.append(data)

39 self._all_labels.append(label)

40

41 def __len__(self):

42 return self._length

43

44 def __getitem__(self, index):

45 return self._all_data[index], self._all_labels[index]

46

47

48def device_iterations_example():

49 # Set the number of samples for which activations/gradients are computed

50 # in parallel on a single IPU

51 model_batch_size = 2

52

53 # Create a poptorch.Options instance to override default options

54 opts = poptorch.Options()

55

56 # Run a 100 iteration loop on the IPU, fetching a new batch each time

57 opts.deviceIterations(100)

58

59 # Set up the DataLoader to load that much data at each iteration

60 training_data = poptorch.DataLoader(opts,

61 dataset=ExampleDataset(shape=[3, 2],

62 length=10000),

63 batch_size=model_batch_size,

64 shuffle=True,

65 drop_last=True)

66

67 model = ExampleModelWithLoss(data_shape=[3, 2], num_classes=2)

68 # Wrap the model in a PopTorch training wrapper

69 poptorch_model = poptorch.trainingModel(model, options=opts)

70

71 # Run over the training data, 100 batches at a time (specified in

72 # opts.deviceIterations())

73 for batch_number, (data, labels) in enumerate(training_data):

74 # Execute the device with a 100 iteration loop of batchsize 2.

75 # "output" and "loss" will be the respective output and loss of the

76 # final batch (the default AnchorMode).

77

78 output, loss = poptorch_model(data, labels)

79 print(f"{labels[-1]}, {output}, {loss}")

5.4. poptorch.Options.replicationFactor

replicationFactor() will replicate the model over

multiple IPUs to allow automatic data parallelism across many IPUs.

1def replication_factor_example():

2 # Set the number of samples for which activations/gradients are computed

3 # in parallel on a single IPU

4 model_batch_size = 2

5 # replication_start

6 # Create a poptorch.Options instance to override default options

7 opts = poptorch.Options()

8

9 # Run a 100 iteration loop on the IPU, fetching a new batch each time

10 opts.deviceIterations(100)

11

12 # Duplicate the model over 4 replicas.

13 opts.replicationFactor(4)

14

15 training_data = poptorch.DataLoader(opts,

16 dataset=ExampleDataset(shape=[3, 2],

17 length=100000),

18 batch_size=model_batch_size,

19 shuffle=True,

20 drop_last=True)

21

22 model = ExampleModelWithLoss(data_shape=[3, 2], num_classes=2)

23 # Wrap the model in a PopTorch training wrapper

24 poptorch_model = poptorch.trainingModel(model, options=opts)

25

26 # Run over the training data, 100 batches at a time (specified in

27 # opts.deviceIterations())

28 for batch_number, (data, labels) in enumerate(training_data):

29 # Execute the device with a 100 iteration loop of model batchsize 2

30 # across 4 IPUs (global batchsize = 2 * 4 = 8). "output" and "loss"

31 # will be the respective output and loss of the final batch of each

32 # replica (the default AnchorMode).

33 output, loss = poptorch_model(data, labels)

34 print(f"{labels[-1]}, {output}, {loss}")

5.5. poptorch.Options.Training.gradientAccumulation

You need to use

gradientAccumulation()

when training with pipelined models because the weights are shared across

pipeline batches so gradients will be both updated and used by subsequent

batches out of order.

Note gradientAccumulation()

is only needed by poptorch.PipelinedExecution.

See also poptorch.Block.

1def gradient_accumulation_example():

2 # Set the number of samples for which activations/gradients are computed

3 # in parallel on a single IPU

4 model_batch_size = 2

5 # Create a poptorch.Options instance to override default options

6 opts = poptorch.Options()

7

8 # Run a 400 iteration loop on the IPU, fetching a new batch each time

9 opts.deviceIterations(400)

10

11 # Accumulate the gradient 8 times before applying it.

12 opts.Training.gradientAccumulation(8)

13

14 training_data = poptorch.DataLoader(opts,

15 dataset=ExampleDataset(shape=[3, 2],

16 length=100000),

17 batch_size=model_batch_size,

18 shuffle=True,

19 drop_last=True)

20

21 # Wrap the model in a PopTorch training wrapper

22 poptorch_model = poptorch.trainingModel(model, options=opts)

23

24 # Run over the training data, 400 batches at a time (specified in

25 # opts.deviceIterations())

26 for batch_number, (data, labels) in enumerate(training_data):

27 # Execute the device with a 100 iteration loop of model batchsize 2

28 # with gradient updates every 8 iterations (global batchsize = 2 * 8 = 16).

29 # "output" and "loss" will be the respective output and loss of the

30 # final batch of each replica (the default AnchorMode).

31 output, loss = poptorch_model(data, labels)

32 print(f"{labels[-1]}, {output}, {loss}")

In the code example below, poptorch.Block introduced in

Section 4.4, Multi-IPU execution strategies is used to divide up

a different model into disjoint subsets of layers.

These blocks can be shared among multiple parallel execution strategies.

1class Network(nn.Module):

2 def __init__(self):

3 super(Network, self).__init__()

4 self.layer1 = nn.Linear(784, 784)

5 self.layer2 = nn.Linear(784, 784)

6 self.layer3 = nn.Linear(784, 128)

7 self.layer4 = nn.Linear(128, 10)

8 self.softmax = nn.Softmax(1)

9

10 def forward(self, x):

11 x = x.view(-1, 784)

12 with poptorch.Block("B1"):

13 x = self.layer1(x)

14 with poptorch.Block("B2"):

15 x = self.layer2(x)

16 with poptorch.Block("B3"):

17 x = self.layer3(x)

18 with poptorch.Block("B4"):

19 x = self.layer4(x)

20 x = self.softmax(x)

21 return x

22

23

24class TrainingModelWithLoss(torch.nn.Module):

25 def __init__(self, model):

26 super().__init__()

27 self.model = model

28 self.loss = torch.nn.CrossEntropyLoss()

29

30 def forward(self, args, loss_inputs=None):

31 output = self.model(args)

32 if loss_inputs is None:

33 return output

34 with poptorch.Block("B4"):

35 loss = self.loss(output, loss_inputs)

36 return output, loss

37

38

You can see the code examples of poptorch.SerialPhasedExecution,

poptorch.PipelinedExecution, and

poptorch.ShardedExecution below.

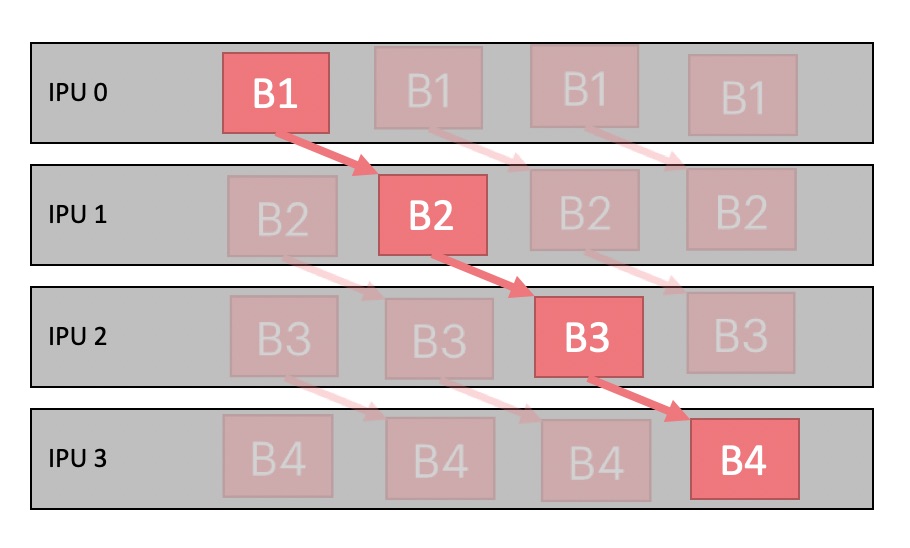

An instance of class poptorch.PipelinedExecution defines an

execution strategy that assigns layers to multiple IPUs as a pipeline. Gradient

accumulation is used to push multiple batches through the pipeline allowing

IPUs to run in parallel.

1 training_data, test_data = get_mnist_data(opts)

2 model = Network()

3 model_with_loss = TrainingModelWithLoss(model)

4 model_opts = poptorch.Options().deviceIterations(1)

5 if opts.strategy == "phased":

6 strategy = poptorch.SerialPhasedExecution("B1", "B2", "B3", "B4")

7 strategy.stage("B1").ipu(0)

8 strategy.stage("B2").ipu(0)

9 strategy.stage("B3").ipu(0)

10 strategy.stage("B4").ipu(0)

11 model_opts.setExecutionStrategy(strategy)

12 elif opts.strategy == "pipelined":

13 strategy = poptorch.PipelinedExecution("B1", "B2", "B3", "B4")

14 strategy.stage("B1").ipu(0)

15 strategy.stage("B2").ipu(1)

16 strategy.stage("B3").ipu(2)

17 strategy.stage("B4").ipu(3)

18 model_opts.setExecutionStrategy(strategy)

19 model_opts.Training.gradientAccumulation(opts.batches_per_step)

20 else:

21 strategy = poptorch.ShardedExecution("B1", "B2", "B3", "B4")

22 strategy.stage("B1").ipu(0)

23 strategy.stage("B2").ipu(0)

24 strategy.stage("B3").ipu(0)

25 strategy.stage("B4").ipu(0)

26 model_opts.setExecutionStrategy(strategy)

27

28 if opts.offload_opt:

29 model_opts.TensorLocations.setActivationLocation(

30 poptorch.TensorLocationSettings().useOnChipStorage(True))

31 model_opts.TensorLocations.setWeightLocation(

32 poptorch.TensorLocationSettings().useOnChipStorage(True))

33 model_opts.TensorLocations.setAccumulatorLocation(

34 poptorch.TensorLocationSettings().useOnChipStorage(True))

35 model_opts.TensorLocations.setOptimizerLocation(

36 poptorch.TensorLocationSettings().useOnChipStorage(False))

37

38 training_model = poptorch.trainingModel(

39 model_with_loss,

40 model_opts,

41 optimizer=optim.AdamW(model.parameters(), lr=opts.lr))

42

43 # run training, on IPU

44 train(training_model, training_data, opts)

Fig. 5.1 shows the pipeline execution for multiple batches on IPUs. There are 4 pipeline stages running on 4 IPUs respectively. Gradient accumulation enables us to keep the same number of pipeline stages, but with a wider pipeline. This helps hide the latency, which is the total time for one item to go through the whole system, as highlighted.

Fig. 5.1 Pipeline execution with gradient accumulation

5.6. poptorch.Options.Training.anchorReturnType

When you use a inferenceModel(), you will usually want to

receive all the output tensors. For this reason, PopTorch will return them

all to you by default. However, you can change this behaviour using

poptorch.Options.anchorMode().

When you use a trainingModel(), you will often not need to

receive all or any of the output tensors and it is more efficient not to

receive them. For this reason, PopTorch only returns the last batch of tensors

by default. As in the the case of inferenceModel, you can change this

behaviour using poptorch.Options.anchorMode().

If you want to monitor training using a metric such as loss or accuracy, you

may wish to take into account all tensors. To do this with minimal or no

overhead, you can use poptorch.AnchorMode.Sum. For example:

1class MulticlassPerceptron(torch.nn.Module): 2 def __init__(self, vec_length, num_classes): 3 super().__init__() 4 self.fc = torch.nn.Linear(vec_length, num_classes) 5 self.loss = torch.nn.CrossEntropyLoss() 6 7 def forward(self, x, target): 8 fc = self.fc(x) 9 10 classification = torch.argmax(fc, dim=-1) 11 accuracy = (torch.sum((classification == target).to(torch.float)) / 12 float(classification.numel())) 13 14 if self.training: 15 return self.loss(fc, target), accuracy 16 17 return classification, accuracy1opts = poptorch.Options() 2 3opts.deviceIterations(5) 4opts.Training.gradientAccumulation(10) 5opts.anchorMode(poptorch.AnchorMode.Sum) 6 7training_data = poptorch.DataLoader(opts, 8 dataset=ExampleClassDataset( 9 NUM_CLASSES, VEC_LENGTH, 2000), 10 batch_size=5, 11 shuffle=True, 12 drop_last=True) 13 14 15model = MulticlassPerceptron(VEC_LENGTH, NUM_CLASSES) 16model.train() 17 18# Wrap the model in a PopTorch training wrapper 19poptorch_model = poptorch.trainingModel(model, 20 options=opts, 21 optimizer=torch.optim.Adam( 22 model.parameters())) 23 24# Run over the training data, 5 batches at a time. 25for batch_number, (data, labels) in enumerate(training_data): 26 # Execute the device with a 5 iteration loop of batchsize 5 with 10 27 # gradient accumulations (global batchsize = 5 * 10 = 50). "loss" and 28 # "accuracy" will be the sum across all device iterations and gradient 29 # accumulations but not across the model batch size. 30 _, accuracy = poptorch_model(data, labels) 31 32 # Correct for iterations 33 # Do not divide by batch here, as this is already accounted for in the 34 # PyTorch Model. 35 accuracy /= (opts.device_iterations * opts.Training.gradient_accumulation) 36 print(f"Accuracy: {float(accuracy)*100:.2f}%")