14. Application example: MNIST

In this section, you will see how to train a simple machine learning application in PopXL. The neural network in this example has two linear layers. It will be trained with the the MNIST dataset. This dataset contains 60,000 training images and 10,000 testing images. Each input image is a handwritten digit with a resolution of 28x28 pixels.

14.1. Import the necessary libraries

First, you need to import all the required libraries.

6import argparse

7from typing import Dict, List, Tuple, Mapping

8import numpy as np

9import torch

10import torchvision

11from tqdm import tqdm

12import popxl

13import popxl.ops as ops

14import popxl.transforms as transforms

15from popxl.ops.call import CallSiteInfo

16

14.2. Prepare dataset

You can get the MNIST training and validation dataset using torch.utils.data.DataLoader.

21def get_mnist_data(

22 test_batch_size: int, batch_size: int

23) -> Tuple[torch.utils.data.DataLoader, torch.utils.data.DataLoader]:

24 """

25 Get the training and testing data for mnist.

26 """

27 training_data = torch.utils.data.DataLoader(

28 torchvision.datasets.MNIST(

29 "~/.torch/datasets",

30 train=True,

31 download=True,

32 transform=torchvision.transforms.Compose(

33 [

34 torchvision.transforms.ToTensor(),

35 # Mean and std computed on the training set.

36 torchvision.transforms.Normalize((0.1307,), (0.3081,)),

37 ]

38 ),

39 ),

40 batch_size=batch_size,

41 shuffle=True,

42 drop_last=True,

43 )

44

45 validation_data = torch.utils.data.DataLoader(

46 torchvision.datasets.MNIST(

47 "~/.torch/datasets",

48 train=False,

49 download=True,

50 transform=torchvision.transforms.Compose(

51 [

52 torchvision.transforms.ToTensor(),

53 torchvision.transforms.Normalize((0.1307,), (0.3081,)),

54 ]

55 ),

56 ),

57 batch_size=test_batch_size,

58 shuffle=True,

59 drop_last=True,

60 )

61 return training_data, validation_data

62

63

14.3. Create IR for training

The training IR is created in build_train_ir. After creating an instance of IR, operations are added

to the IR within the context of its main graph. These operations are also forced to execute in the same

order as they are added by using context manager :py:func:~popxl.in_sequence`.

235 ir = popxl.Ir()

236 ir.num_host_transfers = 1

237 ir.replication_factor = 1

238 with ir.main_graph, popxl.in_sequence():

The initial operation is to load input images and labels to x and labels, respectively

from host-to-device streams img_stream and label_stream.

241 # Host load input and labels

242 img_stream = popxl.h2d_stream(

243 [opts.batch_size, 28, 28], popxl.float32, name="input_stream"

244 )

245 x = ops.host_load(img_stream, "x")

246

247 label_stream = popxl.h2d_stream(

248 [opts.batch_size], popxl.int32, name="label_stream"

249 )

250 labels = ops.host_load(label_stream, "labels")

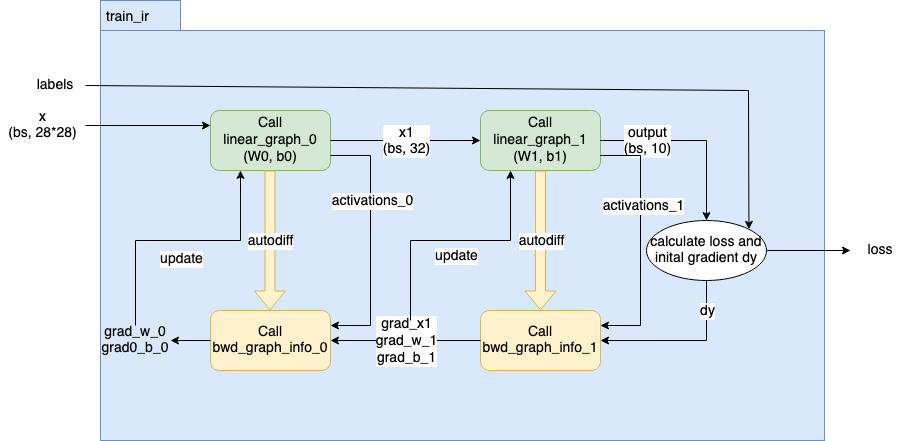

After the data is loaded from host, you can build the network, calculate the loss and gradients, and finally update the weights. This process is shown in Fig. 14.1 and will be detailed in later sections.

Fig. 14.1 Overview of how to build a training IR in PopXL

To monitor the training process, you can also stream the loss from the IPU devices to the host.

266 # Host store to get loss

267 loss_stream = popxl.d2h_stream(loss.shape, loss.dtype, name="loss_stream")

268 ops.host_store(loss_stream, loss)

14.3.1. Create network

The network has 2 linear layers. A linear layer is defined by the class Linear that

inherits from popxl.Module. We are here overriding the build method which builds the

subgraph to do the linear computation.

77class Linear(popxl.Module):

78 def __init__(self) -> None:

79 """

80 Define a linear layer in PopXL.

81 """

82 self.W: popxl.Tensor = None

83 self.b: popxl.Tensor = None

84

85 def build(

86 self, x: popxl.Tensor, out_features: int, bias: bool = True

87 ) -> Tuple[popxl.Tensor, ...]:

88 """

89 Override the `build` method to build a graph.

90 """

91 self.W = popxl.graph_input((x.shape[-1], out_features), popxl.float32, "W")

92 y = x @ self.W

93 if bias:

94 self.b = popxl.graph_input((out_features,), popxl.float32, "b")

95 y = y + self.b

96

97 y = ops.gelu(y)

98 return y

99

100

In the diagram Fig. 14.1, you can see two graphs created from the two linear

layers by using popxl.Ir.create_graph() and called by using popxl.call_with_info().

The tensors x1 and y are respectively the outputs of the first linear graph call and the

second linear graph. The weight tensors, bias tensors, output tensors, graphs, and graph callsite

infos are all returned for the next step. This forward graph of the network is created in the method

create_network_fwd_graph.

105def create_network_fwd_graph(

106 ir, x

107) -> Tuple[

108 Tuple[popxl.Tensor], Dict[str, popxl.Tensor], List[popxl.Graph], Tuple[CallSiteInfo]

109]:

110 """

111 Define the network architecture.

112

113 Args:

114 ir (popxl.Ir): The ir to create model in.

115 x (popxl.Tensor): The input tensor of this model.

116

117 Returns:

118 Tuple[Tuple[popxl.Tensor], Dict[str, popxl.Tensor], List[popxl.Graph], Tuple[CallSiteInfo]]: The info needed to calculate the gradients later

119 """

120 # Linear layer 0

121 x = x.reshape((-1, 28 * 28))

122 W0_data = np.random.normal(0, 0.02, (x.shape[-1], 32)).astype(np.float32)

123 W0 = popxl.variable(W0_data, name="W0")

124 b0_data = np.random.normal(0, 0.02, (32)).astype(np.float32)

125 b0 = popxl.variable(b0_data, name="b0")

126

127 # Linear layer 1

128 W1_data = np.random.normal(0, 0.02, (32, 10)).astype(np.float32)

129 W1 = popxl.variable(W1_data, name="W1")

130 b1_data = np.random.normal(0, 0.02, (10)).astype(np.float32)

131 b1 = popxl.variable(b1_data, name="b1")

132

133 # Create graph to call for linear layer 0

134 linear_0 = Linear()

135 linear_graph_0 = ir.create_graph(linear_0, x, out_features=32)

136

137 # Call the linear layer 0 graph

138 fwd_call_info_0 = ops.call_with_info(

139 linear_graph_0, x, inputs_dict={linear_0.W: W0, linear_0.b: b0}

140 )

141 # Output of linear layer 0

142 x1 = fwd_call_info_0.outputs[0]

143

144 # Create graph to call for linear layer 1

145 linear_1 = Linear()

146 linear_graph_1 = ir.create_graph(linear_1, x1, out_features=10)

147

148 # Call the linear layer 1 graph

149 fwd_call_info_1 = ops.call_with_info(

150 linear_graph_1, x1, inputs_dict={linear_1.W: W1, linear_1.b: b1}

151 )

152 # Output of linear layer 1

153 y = fwd_call_info_1.outputs[0]

154

155 outputs = (x1, y)

156 params = {"W0": W0, "W1": W1, "b0": b0, "b1": b1}

157 linears = [linear_0, linear_1]

158 fwd_call_infos = (fwd_call_info_0, fwd_call_info_1)

159

160 return outputs, params, linears, fwd_call_infos

161

162

14.3.2. Calculate gradients and update weights

After creating the forward pass in the training IR, we will calculate the gradients in calculate_grads

and update the weights and bias in update_weights_bias.

Calculate

lossand initial gradientsdyby usingnll_loss_with_softmax_grad().256 # Calculate loss and initial gradients 257 probs = ops.softmax(outputs[1], axis=-1) 258 loss, dy = ops.nll_loss_with_softmax_grad(probs, labels)

Construct the graph to calculate the gradients for each layer,

bwd_graph_info_0andbwd_graph_info_1by using :py:func:~popxl.transforms.autodiff` (Section 9.1, Autodiff) transformation on its forward pass graph. Note that, you only need to calculate the gradients forW0andb0in the first layer, and gradients for all the inputs,x1,W1andb1, in the second layer. In this example, you will see two different ways to useautodiffand how to use it to get the required gradients.Let’s start fromt the second layer. The

bwd_graph_info_1, returned fromautodiffof the second layer, contains the graph to calculate the gradient for the layer. The activations for this layeractivations_1is obtained from the corresponding forward graph call. After calling the gradient graph,bwd_graph_info_1.graphwithpopxl.ops.call_with_info, thegrads_1_call_infois used to get all the gradients with regard to the inputsx1,W1, andb1. The methodfwd_parent_ins_to_grad_parent_outsgives a mapping from the corresponding forward graph inputs,x1,W1, andb1, and their gradients,grad_x1,grad_w_1, andgrad_b_1. The input gradient forgrads_1_call_infoisdy.173 # Obtain graph to calculate gradients from autodiff 174 bwd_graph_info_1 = transforms.autodiff(fwd_call_infos[1].called_graph) 175 176 # Get activations for layer 1 from forward call info 177 activations_1 = bwd_graph_info_1.inputs_dict(fwd_call_infos[1]) 178 179 # Get the gradients dictionary by calling the gradient graphs with ops.call_with_info 180 grads_1_call_info = ops.call_with_info( 181 bwd_graph_info_1.graph, dy, inputs_dict=activations_1 182 ) 183 # Find the corresponding gradient w.r.t. the input, weights and bias 184 grads_1 = bwd_graph_info_1.fwd_parent_ins_to_grad_parent_outs( 185 fwd_call_infos[1], grads_1_call_info 186 ) 187 x1 = outputs[0] 188 W1 = params["W1"] 189 b1 = params["b1"] 190 grad_x_1 = grads_1[x1] 191 grad_w_1 = grads_1[W1] 192 grad_b_1 = grads_1[b1]

For the first layer, we can obtain the required gradients in a similar way. Here we will show you an alternative approach. We define the list of tensors that require gradients

grads_required=[linears[0].W, linears[0].b]inautodiff. Their gradients are returned directly from thepopxl.ops.callof the gradient graphbwd_graph_info_0.graph. The input gradient forgrads_0_call_infofis the gradients w.r.t the input of the second linear graph, the output of the first linear graph,grad_x_1.195 # Use autodiff to obtain graph that calculate gradients, specify which graph inputs need gradients 196 bwd_graph_info_0 = transforms.autodiff( 197 fwd_call_infos[0].called_graph, grads_required=[linears[0].W, linears[0].b] 198 ) 199 # Get activations for layer 0 from forward call info 200 activations_0 = bwd_graph_info_0.inputs_dict(fwd_call_infos[0]) 201 # Get the required gradients by calling the gradient graphs with ops.call 202 grad_w_0, grad_b_0 = ops.call( 203 bwd_graph_info_0.graph, grad_x_1, inputs_dict=activations_0 204 )

Update the weights and bias tensors with SGD by using

scaled_add_().212def update_weights_bias(opts, grads, params) -> None: 213 """ 214 Update weights and bias by W += - lr * grads_w, b += - lr * grads_b. 215 """ 216 for k, v in params.items(): 217 ops.scaled_add_(v, grads[k], b=-opts.lr) 218 219

14.4. Run the IR to train the model

After an IR is built taking into account the batch size args.batch_size, we can run it repeatedly until the end of the required

number of epochs. Each session is initiated by one IR as shown in the following code:

374 train_session = popxl.Session(train_ir, "ipu_model")

375 with train_session:

376 train(train_session, training_data, opts, input_streams, loss_stream)

The session is run for nb_batches times for each epoch. Each train_session run consumes a batch of input images

and labels, and produces their loss values to the host.

302def train(train_session, training_data, opts, input_streams, loss_stream) -> None:

303 nb_batches = len(training_data)

304 for epoch in range(1, opts.epochs + 1):

305 print("Epoch {0}/{1}".format(epoch, opts.epochs))

306 bar = tqdm(training_data, total=nb_batches)

307 for data, labels in bar:

308 inputs: Mapping[popxl.HostToDeviceStream, np.ndarray] = dict(

309 zip(

310 input_streams,

311 [data.squeeze().float().numpy(), labels.int().numpy()],

312 )

313 )

314

315 outputs = train_session.run(inputs)

316 loss = outputs[loss_stream]

317 bar.set_description(f"Average loss: {np.mean(loss):.4f}")

318

319

After the training session finishes running, the trained tensor values, in a mapping from tensors to their values trained_weights_data_dict,

are obtained by using train_session.get_tensors_data.

14.5. Create an IR for testing and run the IR to test the model

For testing the trained tensors, you need to create an IR for testing, test_ir, and its corresponding session,

test_session to run the test. The method write_variables_data is used to copy the trained values from

trained_weights_data_dict to the corresponding tensors in test IR, test_variables.

382 # Build the ir for testing

383 test_ir, test_input_streams, out_stream, test_variables = build_test_ir(opts)

384 test_session = popxl.Session(test_ir, "ipu_model")

385 # Get test variable values from trained weights

386 test_weights_data_dict = get_test_var_values(

387 test_variables, trained_weights_data_dict

388 )

389 # Copy trained weights to the test ir

390 test_session.write_variables_data(test_weights_data_dict)

391 with test_session:

392 test(test_session, test_data, test_input_streams, out_stream)