14. Distributed PopXL Instances

14.1. Introduction

PopXL and the underlying Poplar SDK software stack are designed to help you run multiple instances of your single PopXL program in an SPMD (single program, multiple data) manner, for example with MPI. Combined with graph replication (see Section 13, Replication), each instance will have a “local” replication factor, and overall there will be a “global” replication factor. For example, you may wish to run your model in data-parallel across 16 replicas and 4 host instances. This means each instance will have a local replication factor of 4, and the global replication factor is 16. Global replication with no local replication is also possible. Each instance can only directly communicate with the replicas assigned to it, but is aware of the global situation (for example that it covers replicas 4…7 in a 4-instance program of 16 replicas total).
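The arithmetic relating the global and local replication factors can be sketched in plain Python. This is only an illustration: `local_replica_range` is a hypothetical helper, not part of PopXL or PopDist, and it assumes the replicas are split evenly and contiguously across instances, as in the 16-replica, 4-instance example above.

```python
def local_replica_range(global_replicas: int, num_instances: int, instance: int) -> range:
    """Global replica indices owned by `instance`.

    Assumption: replicas are divided evenly and contiguously across instances.
    """
    if global_replicas % num_instances != 0:
        raise ValueError("global replication factor must be divisible by the number of instances")
    if not 0 <= instance < num_instances:
        raise ValueError("instance index out of range")
    local = global_replicas // num_instances  # local replication factor
    start = instance * local
    return range(start, start + local)


# 16 global replicas over 4 instances: instance 1 covers global replicas 4..7.
replicas = local_replica_range(16, 4, 1)
print(len(replicas), list(replicas))
```

In a real program you would not compute this yourself; PopDist supplies the equivalent information to each instance.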

The reasons you may wish to distribute your replicas over multiple host instances include:

To increase the potential bandwidth between host and IPU, by locating your instances on separate physical hosts (as each will have its own links to its IPUs).

You must have at least one process per ILD when using a partition of IPUs that spans multiple ILDs. Please see here and here for more information on ILDs.

This concept is referred to as “multiple instances”, “multiple processes”, “multiple hosts”, “distributed instances”, “distributed replicated graphs”, and other similar terms.

14.2. Primer on using PopDist and PopRun to run multi-instance applications

You can use PopRun and PopDist to run multiple instances of your program using MPI. We will give a brief introduction here, but the PopDist and PopRun: User Guide contains more information.

PopDist provides a Python library that will help you set up a PopXL program to be run on multiple instances. Specifically, it will set metadata about the multi-instance environment (like global replication factor and which replicas are on the instance) in the session options, and will select a suitable device for the instance. PopDist gets this information from environment variables that are set for each process.

PopRun is a command-line interface that wraps your application command line, and to which you pass options configuring the multi-instance setup, for example the number of instances, the hosts to spread the instances across, and the global replication factor. It can optionally create an appropriate V-IPU partition for you with the correct number of GCDs, devices, and so on, for running a Poplar program according to the multi-instance configuration you specified. It will then use MPI to run the command line you passed according to this configuration. It can assign instances to the hosts in a NUMA-aware manner. The environment variables read by PopDist will be set appropriately in each instance for you. This enables PopDist to set up your PopXL program correctly on each instance.

14.3. Basic Example

This example shows how to configure a program to run on multiple instances using PopDist and PopRun.

Assume you have the following program model.py:

    import popxl

    ir = popxl.Ir()
    with ir.main_graph:
        v = popxl.variable(1)
        w = popxl.variable(2)
        v += w

    with popxl.Session(ir, 'ipu_hw') as sess:
        sess.run()
    print(sess.get_tensor_data(v))

To configure this program for multiple instances using PopDist, you only need to change the Ir-construction line to popxl.Ir(replication='popdist'). This will tell PopXL to use PopDist for configuring the session options and selecting the device.

The following poprun command runs your program model.py on 2 instances (--num-instances) with 2 global replicas (--num-replicas). This gives 1 replica per instance. It also creates an appropriate partition for you called popxl_test (--vipu-partition popxl_test --update-partition yes):

    poprun \
        --vipu-partition popxl_test --update-partition yes \
        --num-replicas 2 --num-instances 2 \
        python model.py

If you pass --print-topology yes, PopRun will print a diagram visualising the topology it has created for you:

     ===================
    |  poprun topology  |
    |===================|
    | hosts     |  [0]  |
    |-----------|-------|
    | ILDs      |   0   |
    |-----------|-------|
    | instances | 0 | 1 |
    |-----------|-------|
    | replicas  | 0 | 1 |
     -------------------

In a more realistic example, you would do something like data-parallel training over all of the global replicas. Each instance would be responsible for passing the right sub-batches of data to popxl.Session.run(). The optimiser step would perform a popxl.ops.replicated_all_reduce() on the gradient tensor before applying the weight update. The AllReduce automatically happens across all the replicas on all the instances, in an efficient way given the network topology between the replicas.
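The data flow described above can be mimicked on the host in plain Python. This is a toy sketch, not PopXL code: `sub_batch` and `all_reduce_sum` are hypothetical helpers, the "gradients" are made-up numbers, and a sum reduction over an evenly divided batch is assumed.

```python
def sub_batch(global_batch, num_instances, instance):
    """Slice of the global batch that `instance` would feed to its local replicas."""
    per_instance, remainder = divmod(len(global_batch), num_instances)
    if remainder:
        raise ValueError("batch size must be divisible by the number of instances")
    start = instance * per_instance
    return global_batch[start:start + per_instance]


def all_reduce_sum(per_replica_grads):
    """Host-side stand-in for an AllReduce: every replica receives the elementwise sum."""
    total = [sum(values) for values in zip(*per_replica_grads)]
    return [list(total) for _ in per_replica_grads]


batch = list(range(8))
shards = [sub_batch(batch, 2, i) for i in range(2)]  # one shard per instance
grads = [[float(sum(shard)), 1.0] for shard in shards]  # toy per-replica "gradients"
reduced = all_reduce_sum(grads)  # identical on every replica after the reduction
print(shards, reduced)
```

Because every replica ends up with the same reduced gradient, every replica applies the same weight update, which keeps the weights in step across instances.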

14.4. Variable ReplicaGroupings and multiple instances

Note

This section assumes you are familiar with using a ReplicaGrouping to control how a variable is initialised across replicas in PopXL. See Section 13.2, Replica grouping for more details.

The replica grouping is defined across the global replicas, not locally on each instance. This means that, in a multi-instance program, the default replica grouping of a single group containing all replicas is all of the replicas across all of the instances. This is what happened in the above example (it did not pass a replica grouping to popxl.variable so used the default): there was 1 group containing 2 replicas, and each instance had one of those replicas. Therefore, groups can span across instances.

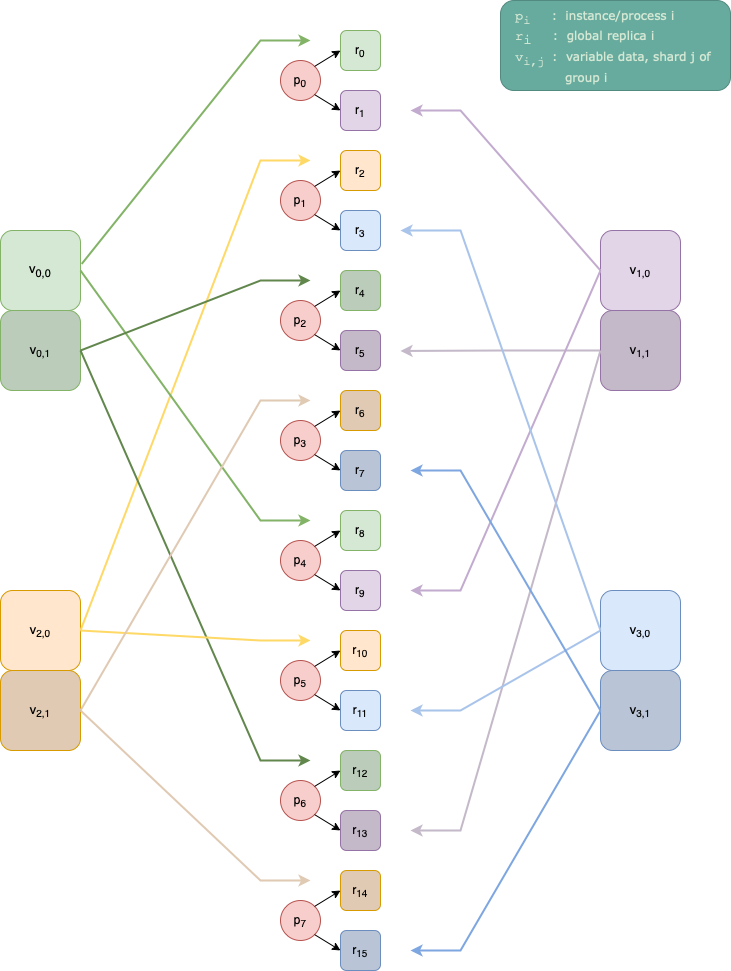

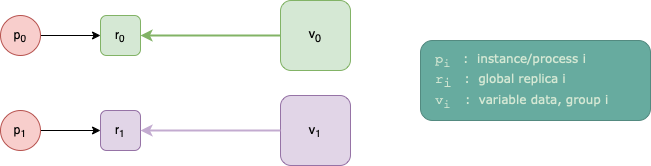

Fig. 14.1 shows a scenario with 2 instances, a global replication factor of 2, and ReplicaGrouping(group_size=1, stride=1).

Fig. 14.1 The assignment of the replicas to instances, and of the variable data from the host to each replica. This is for 2 instances, 2 replicas, and ReplicaGrouping(group_size=1, stride=1).

As the variable has multiple groups, you must pass one set of host tensor data for each group. For a variable with shape shape and N groups (N > 1), this is done by passing host data of shape (N, *shape). In the example, there are 2 groups, so the host data you pass must have an extra dimension at the front of size 2.
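The shape rule can be written down as a small function. This is a sketch only: `host_data_shape` is a hypothetical helper rather than a PopXL API, and it assumes the number of groups is the global replication factor divided by the group size.

```python
def host_data_shape(replica_shape, num_replicas, group_size):
    """Shape of the host data to pass for a variable of per-replica shape `replica_shape`.

    Assumption: num_groups = num_replicas / group_size, with num_replicas
    being the global replication factor.
    """
    num_groups, remainder = divmod(num_replicas, group_size)
    if remainder:
        raise ValueError("group_size must divide the number of replicas")
    if num_groups > 1:
        # One set of data per group: an extra leading dimension of size N.
        return (num_groups, *replica_shape)
    # A single group: the host data has the variable's own shape.
    return tuple(replica_shape)


print(host_data_shape((4,), num_replicas=2, group_size=1))
print(host_data_shape((4,), num_replicas=2, group_size=2))
```

The first call corresponds to the scenario in Fig. 14.1 (2 groups of 1 replica), and the second to the default grouping of one group containing both replicas.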

For each replica, PopXL will send the data for that replica’s group to that replica. This happens as per usual in popxl.Session.weights_from_host(). In the above example, weights_from_host is called implicitly when entering the Session context. See Section 11, Session if you are unfamiliar with this.

Specifically, instance 0 will send v_data[0] to global replica 0 (which is local replica 0 on that instance); and instance 1 will send v_data[1] to global replica 1 (which is local replica 0 on that instance).
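The routing just described can be tabulated with a few lines of plain Python. This is an illustrative sketch: `Placement` and `placements` are hypothetical names, and it assumes replicas are assigned to instances contiguously and that the grouping has stride 1, so that the group index is the global replica index divided by the group size.

```python
from typing import NamedTuple


class Placement(NamedTuple):
    global_replica: int
    instance: int
    local_replica: int
    group: int  # index into the leading dimension of the host data


def placements(num_replicas, num_instances, group_size):
    """Where each global replica lives and which host data slice it receives.

    Assumptions: contiguous, even assignment of replicas to instances,
    and a replica grouping with stride 1.
    """
    if num_replicas % num_instances or num_replicas % group_size:
        raise ValueError("replicas must divide evenly into instances and groups")
    local = num_replicas // num_instances  # local replication factor
    return [
        Placement(r, r // local, r % local, r // group_size)
        for r in range(num_replicas)
    ]


for p in placements(num_replicas=2, num_instances=2, group_size=1):
    print(p)
```

For 2 instances, 2 replicas and a group size of 1, this lists global replica 0 as local replica 0 on instance 0 receiving slice 0, and global replica 1 as local replica 0 on instance 1 receiving slice 1.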

The code for such a program is shown in Listing 14.1.

    import numpy as np
    import popxl

    # Host data for all groups: one row per group.
    num_groups = 2
    nelms_per_group = 4
    host_shape = (num_groups, nelms_per_group)
    v_data = np.arange(0, num_groups * nelms_per_group).reshape(host_shape)

    ir = popxl.Ir(replication="popdist")
    with ir.main_graph:
        # One replica per group, defined over the global replicas.
        grouping = ir.replica_grouping(group_size=1)
        v = popxl.variable(v_data, grouping=grouping)

        # On each replica, the variable has the per-group shape.
        replica_shape = v.shape
        assert replica_shape == (nelms_per_group,)

        v += 1

    with popxl.Session(ir, "ipu_hw") as sess:
        sess.run()
    print(sess.get_tensor_data(v))


You can print sess.get_tensor_data(v) and see that every instance now has the full globally updated weight tensor. This is because, in popxl.Session.weights_to_host() (called implicitly on context exit in the above code), each instance gets the updated weight slices from its local replicas, and the instances then communicate the data between them so that all instances have all the updated data.

The program above is what each instance executes in an SPMD paradigm (using MPI, if using PopRun). However, the code is aware of the full global picture. That is, each instance running the code passes a replica grouping defined over the global replica space; each passes the full global v_data even though some of the data will not belong to the replicas of that instance; and each returns the full global data in sess.get_tensor_data(v).

14.4.1. Detail of inter-instance communication

In weights_to_host, each instance will send the data from each local replica to the same corresponding slice in the weight’s data buffer. We are using the default one_per_group variable retrieval mode, so we only send data from the first replica in each group back to host (see parameter retrieval_mode of popxl.variable()).

At this point, each instance has updated the slices of the host weight buffer only for the groups whose first replica resided on that instance. Thus, the instances need to communicate with each other to recover the fully updated weight tensor.

Let us examine the example in Listing 14.1. There are 2 groups. The first replica of group 0 is global replica 0, which is local replica 0 on instance 0. Therefore, instance 0 will fetch the updated v data from that replica and write it to the internal host buffer for group 0 of v.

The first replica of group 1 is global replica 1, which is local replica 0 on instance 1. Therefore, instance 1 will fetch the updated v data from that replica and write it to the internal host buffer for group 1 of v.

At this point, instance 0 has only updated the data for group 0 locally, and instance 1 has only updated the data for group 1 locally. The instances will therefore communicate between themselves so that all instances have all the updated data. PopXL does all of this automatically.
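The two steps can be simulated on a single host to make the sequence concrete. This is a plain-Python sketch, not how PopXL is implemented: `weights_to_host_sim` is a hypothetical function, the exchange is modelled as a simple merge of lists, and it assumes a group size of 1, so that group g is global replica g.

```python
def weights_to_host_sim(device_values, num_instances):
    """Simulate the two steps of retrieving weights in one_per_group mode.

    device_values[g] is the updated value held by the first replica of group g.
    Assumption: group_size=1, replicas assigned contiguously to instances.
    Returns (per-instance buffers before the exchange, buffers after it).
    """
    num_groups = len(device_values)
    if num_groups % num_instances:
        raise ValueError("groups must divide evenly across instances")
    local = num_groups // num_instances

    # Step 1: each instance fills in only the groups whose first replica it owns.
    partial = []
    for instance in range(num_instances):
        buffer = [None] * num_groups
        for g in range(instance * local, (instance + 1) * local):
            buffer[g] = device_values[g]
        partial.append(buffer)

    # Step 2: the instances exchange data so that each holds every group.
    merged = [
        next(buf[g] for buf in partial if buf[g] is not None)
        for g in range(num_groups)
    ]
    full = [list(merged) for _ in range(num_instances)]
    return partial, full


# Values of v after `v += 1` in Listing 14.1, one row per group.
updated = [[1, 2, 3, 4], [5, 6, 7, 8]]
partial, full = weights_to_host_sim(updated, num_instances=2)
print(partial)
print(full)
```

Before the exchange, instance 0 holds only group 0 and instance 1 holds only group 1; afterwards both hold the complete tensor.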

Say we had used variable retrieval mode all_replicas (see parameter retrieval_mode of popxl.variable()). The host buffer would hold one weight tensor value per global replica, but each instance would have updated it only for the replicas that reside on that instance. As with the above case, the instances would automatically communicate to recover the full weight tensor.

Internally, PopXL uses MPI for performing the inter-instance communication, and will automatically initialise an MPI environment for you.