2. Concepts and architecture

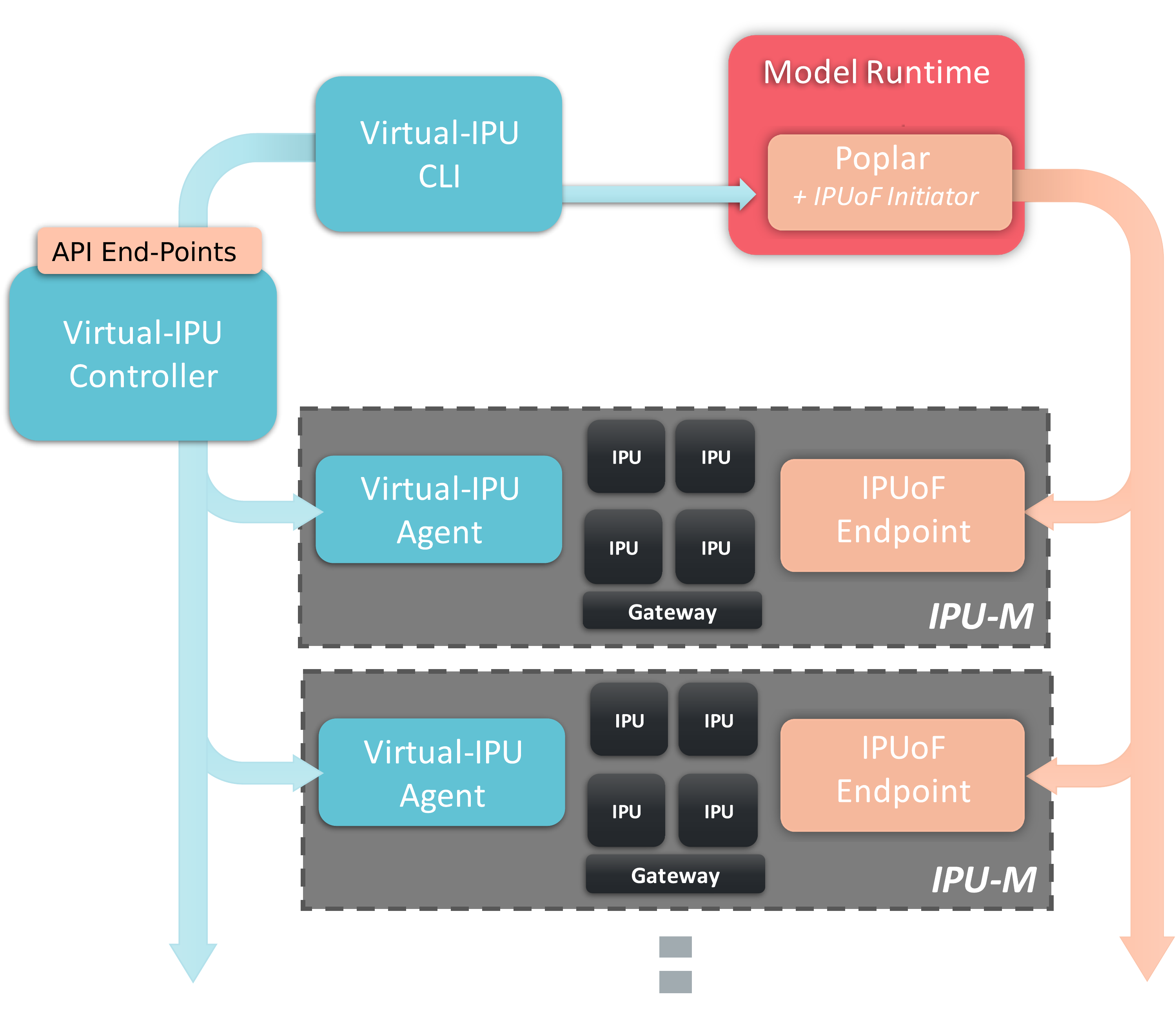

The V-IPU management software consists of the following components:

V-IPU agents: An agent resides on each IPU-Machine in an IPU system. The agents implement API end-points for managing the IPUs and exporting application-monitoring metrics, as well as the server-side component of IPUoF that talks to the Poplar runtime.

V-IPU controller: The controller runs on a management node. It is responsible for managing V-IPU agents and serving API end-points. The binary distribution of the V-IPU controller is called vipu-server.

API end-points: There are two API end-points provided by the V-IPU controller: admin and user end-points. The admin end-point is to be used for deployment and administration of a V-IPU cluster, while the user end-point is used by users to request V-IPU resources. Third-party management and scheduling software, such as Kubernetes or Slurm, use the user API end-point.

V-IPU command-line interface: The command line tools are clients that use the API end-points to provide access to the functions of the V-IPU controller. The tool using the admin end-point is called vipu-admin. The tool using the user end-point is called vipu. This document describes the use of the vipu-admin tool.
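
As a reading aid, the relationship between the two end-points and their command-line clients can be written down as a small lookup table. The sketch below only models the component list above: the `EndPoint` class, the `END_POINTS` table and the `client_for` helper are hypothetical names chosen for illustration and are not part of any V-IPU API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EndPoint:
    """One API end-point served by the V-IPU controller (vipu-server)."""
    name: str      # "admin" or "user"
    purpose: str   # what the end-point is used for
    cli_tool: str  # command-line client that talks to this end-point


# Hypothetical table mirroring the component list in this section.
END_POINTS = {
    "admin": EndPoint("admin", "deploy and administer a V-IPU cluster", "vipu-admin"),
    "user": EndPoint("user", "request V-IPU resources", "vipu"),
}


def client_for(end_point: str) -> str:
    """Return the command-line tool that uses the given end-point."""
    return END_POINTS[end_point].cli_tool
```

In this model, `client_for("admin")` yields the tool covered by this document, while third-party schedulers would sit alongside `vipu` as further clients of the user end-point.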

2.1. Architecture

The architecture of the V-IPU management system is shown in Fig. 2.1.

In a typical V-IPU setup, each IPU-Machine runs a V-IPU agent that is responsible for the hardware introspection, configuration, and management of the IPU-Machine.

Fig. 2.1 V-IPU architecture

The V-IPU agents are controlled and managed by the V-IPU controller. For Pods used in “direct attach” mode, the V-IPU controller runs on one of the IPU-Machines. In switched Pods, the V-IPU controller runs on a host server, which may be one of the servers running Poplar or a separate management server.
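
The placement rules above can be summarised in a few lines of Python. This is a toy model under stated assumptions, not V-IPU code: `PodMode`, `Deployment`, the machine names and the default `host_server` value are all hypothetical, and choosing the first IPU-Machine in direct attach mode is an arbitrary simplification.

```python
from dataclasses import dataclass
from enum import Enum


class PodMode(Enum):
    DIRECT_ATTACH = "direct attach"
    SWITCHED = "switched"


@dataclass
class Deployment:
    """Toy description of a Pod: its mode and its IPU-Machines."""
    mode: PodMode
    ipu_machines: list[str]  # hypothetical machine names

    def agent_hosts(self) -> list[str]:
        """Every IPU-Machine runs its own V-IPU agent."""
        return list(self.ipu_machines)

    def controller_host(self, host_server: str = "host-server") -> str:
        """Return where the V-IPU controller runs for this Pod mode."""
        if self.mode is PodMode.DIRECT_ATTACH:
            # Direct attach: the controller runs on one of the IPU-Machines.
            # Picking the first one is an arbitrary choice for this sketch.
            return self.ipu_machines[0]
        # Switched: the controller runs on a host server, which may be a
        # Poplar server or a separate management server.
        return host_server
```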

The V-IPU controller exposes its end-points over gRPC, offering a rich set of administration and user APIs. These can be used to define clusters, create user partitions, monitor the IPU clusters and obtain diagnostics from them.

When a user creates a partition to run user applications, a set of IPUs is selected according to the workload requirements, and the necessary IPU, IPU-Link and sync configuration is applied to form an isolated sub-cluster for the application. The Host-Links (IPUoF end-points) are configured as well, so that the IPUs are ready to run end-user Poplar applications.
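
The idea of carving isolated partitions out of a shared cluster can be illustrated with a minimal sketch. It assumes a simple "first free IPUs" policy and ignores the IPU-Link, sync and Host-Link configuration described above, so it should not be read as the selection algorithm the V-IPU controller uses. The `Cluster` class and its methods are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy model of a cluster: IPU ids and the partition that owns each one."""
    ipu_ids: list[int]
    owner: dict[int, str] = field(default_factory=dict)  # IPU id -> partition name

    def free_ipus(self) -> list[int]:
        """Return the ids of IPUs that belong to no partition."""
        return [i for i in self.ipu_ids if i not in self.owner]

    def create_partition(self, name: str, size: int) -> list[int]:
        """Reserve `size` free IPUs for partition `name` and return their ids."""
        if size <= 0:
            raise ValueError("size must be positive")
        if name in self.owner.values():
            raise ValueError(f"partition {name!r} already exists")
        free = self.free_ipus()
        if len(free) < size:
            raise RuntimeError(f"only {len(free)} IPUs free, {size} requested")
        # Assumed policy: take the first free IPUs in id order.
        selected = free[:size]
        for ipu in selected:
            self.owner[ipu] = name
        return selected
```

Because an IPU is recorded under at most one owner, two partitions created from the same `Cluster` never share an IPU, which is the isolation property the paragraph above describes.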