5. OpenStack deployment

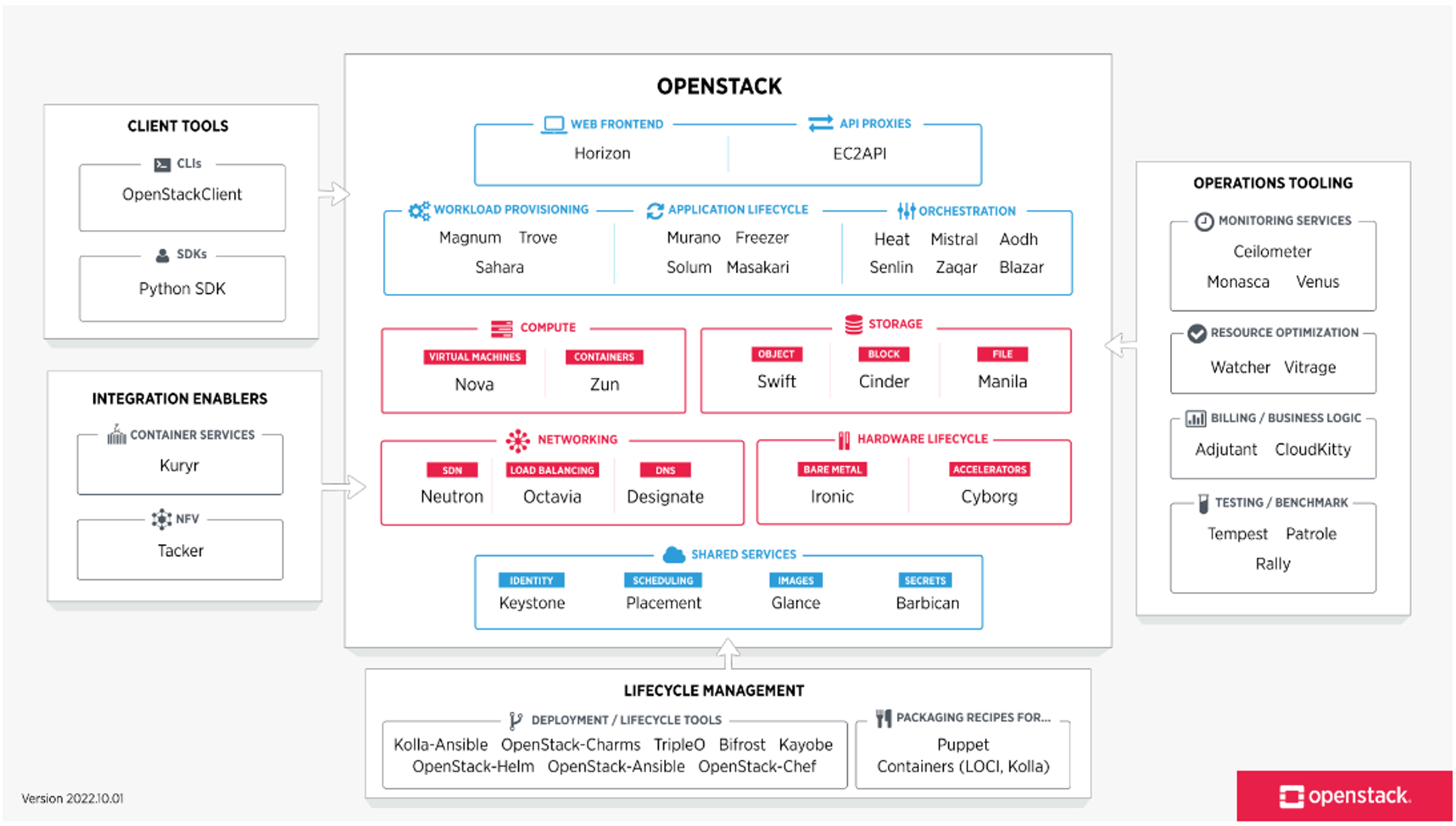

The figure below, taken from the OpenStack software page, shows an overview of OpenStack services:

Fig. 5.1 OpenStack overview

To integrate Graphcore IPU-Machines with OpenStack, the following OpenStack interfaces and services are used.

5.1. Ironic

A custom OpenStack Ironic driver was written for IPU-Machines, which allows them to be managed as bare-metal devices. The driver currently implements:

Simple DHCP control of the network interfaces

Simple Redfish power control (not yet tested)

The Ironic driver can be found in Section 9.6, IPU-Machine Ironic driver.
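As a rough illustration of what "simple Redfish power control" involves, the sketch below builds (but does not send) a standard Redfish `ComputerSystem.Reset` request. It is not the driver from Section 9.6. The BMC address, the system ID and the helper name `build_reset_request` are hypothetical, and the resource path an IPU-Machine BMC actually exposes may differ. The power-state strings follow Ironic's conventional names.

```python
import json

# Mapping from Ironic-style power states to standard Redfish ResetType values.
RESET_TYPES = {
    "power on": "On",
    "power off": "ForceOff",
    "rebooting": "ForceRestart",
}


def build_reset_request(bmc_address, system_id, target_state):
    """Return (url, json_body) for a Redfish reset action. Nothing is sent."""
    try:
        reset_type = RESET_TYPES[target_state]
    except KeyError:
        raise ValueError(f"unsupported power state: {target_state!r}")
    # Assumption: the BMC exposes the conventional Systems resource path.
    url = (
        f"https://{bmc_address}/redfish/v1/Systems/{system_id}"
        "/Actions/ComputerSystem.Reset"
    )
    return url, json.dumps({"ResetType": reset_type})
```

An HTTP client would POST the returned body to the returned URL with the BMC credentials. Keeping request construction separate from transport makes the mapping easy to check without hardware.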

5.2. Nova

The following OpenStack Nova features were used to ensure optimal communication with the IPU-Machines:

Pinned pages for user VMs. All free pages allocated to the VMs are pinned.

HugePages (1 GB) - on a 512 GB system there should be 464x 1 GB HugePages provisioned on the hypervisor for the expected workloads.

SR-IOV pass-through of RNIC Virtual Functions (VFs).

Dedicated physical CPUs:

nova_cpu_dedicated_set in nova.conf, with an equal number of CPUs dedicated from each NUMA zone.

hw:cpu_policy: select dedicated in the flavor configuration (to enable dedicated resources).

Note that it is also possible (and tested) to use over-subscribed vCPUs. Pinned pages are a requirement to allow for direct NIC connections - see Section 8.6, 100 GbE networks with RDMA over Converged Ethernet (RoCE).
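The sizing rules above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the NUMA topology passed in is hypothetical, GB and GiB are treated as equal, the kernel arguments shown are the standard Linux boot parameters for 1 GB HugePages, and the `hw:mem_page_size` extra spec is an addition not mentioned in the text.

```python
def hugepage_plan(total_gb, hugepages_1g):
    """Work out host headroom and kernel args for 1 GB HugePages."""
    if hugepages_1g >= total_gb:
        raise ValueError("HugePages would consume all system memory")
    return {
        # Memory left for the hypervisor OS and services.
        "reserved_for_host_gb": total_gb - hugepages_1g,
        "kernel_args": (
            "default_hugepagesz=1G hugepagesz=1G "
            f"hugepages={hugepages_1g}"
        ),
    }


def dedicated_cpu_set(numa_cpus, per_zone):
    """Pick the same number of CPUs from every NUMA zone.

    numa_cpus maps a zone ID to its CPU IDs. The highest-numbered CPUs in
    each zone are chosen, leaving the lowest ones for the host.
    """
    if per_zone < 1:
        raise ValueError("per_zone must be at least 1")
    picked = []
    for zone in sorted(numa_cpus):
        cpus = sorted(numa_cpus[zone])
        if per_zone > len(cpus):
            raise ValueError(f"NUMA zone {zone} has only {len(cpus)} CPUs")
        picked.extend(cpus[-per_zone:])
    return ",".join(str(cpu) for cpu in sorted(picked))


# Flavor extra specs matching the settings described in the text.
# hw:mem_page_size is an assumption; only hw:cpu_policy comes from the text.
FLAVOR_EXTRA_SPECS = {
    "hw:cpu_policy": "dedicated",
    "hw:mem_page_size": "1GB",
}
```

For the 512 GB example, `hugepage_plan(512, 464)` leaves 48 GB for the hypervisor itself. The string returned by `dedicated_cpu_set` is in the comma-separated form accepted for the dedicated CPU set.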

5.3. Neutron (Open vSwitch)

OpenStack SDN is conventionally implemented as a set of Neutron services. Our recommendation is to use the Open vSwitch (OVS) Neutron ML2 driver.

In a virtualised OVS-based OpenStack deployment, instance network configuration is terminated in the associated hypervisor using Open vSwitch. OpenStack networking for VM instances and other software-defined infrastructure is expressed as flow rules for the Open vSwitch bridges.

Our reference design makes use of VF-LAG with OVS hardware offload. This gives maximum performance and resilience by exposing the VFs via a bonded network connection, enabling Open vSwitch to offload intensive activities onto the dedicated silicon in the Mellanox ConnectX-5 NIC.

For the hardware offloads to be enabled, OVS has to be connected to the bond directly, without using Linux network bridges. This means that the Overcloud (hypervisors and control nodes) and the workload cannot communicate using the bonded 100 GbE interface, and must instead use an additional network interface. For low-bandwidth applications such as Octavia, the existing 1 GbE network interfaces could be used for this traffic, but for high-bandwidth applications (such as sharing the Overcloud Ceph cluster via CephFS or RADOS), additional high-performance NICs will have to be provisioned.
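The requirement that OVS is attached to the bond directly can be illustrated with the `ovs-vsctl` invocations involved. The Python sketch below only builds the command lines; it does not run them. The bridge name `br-ex` and bond name `bond0` are hypothetical, and in a real deployment these settings would normally be applied by the deployment tooling rather than by hand.

```python
import shlex


def ovs_offload_commands(bridge, bond):
    """Return ovs-vsctl commands that enable offload and attach the bond."""
    return [
        # Enable hardware offload globally. OVS typically needs a restart
        # before this setting takes effect.
        ["ovs-vsctl", "set", "Open_vSwitch", ".",
         "other_config:hw-offload=true"],
        # Attach the bond itself to the OVS bridge, with no Linux bridge
        # in between.
        ["ovs-vsctl", "--may-exist", "add-port", bridge, bond],
    ]


def render(commands):
    """Format the commands as shell-safe lines for review."""
    return [shlex.join(cmd) for cmd in commands]
```

Calling `render(ovs_offload_commands("br-ex", "bond0"))` gives the two command lines as strings, which can be reviewed before anything is applied to a hypervisor.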