1. Overview

OpenStack can be deployed in many different configurations, and Graphcore does not endorse or support any particular configuration. OpenStack is not a prerequisite for using Graphcore technology; however, it is often used as the underlying infrastructure in data centres. The reference design described in this document can be used as a guideline for deriving your own configuration of a Graphcore IPU solution on your existing OpenStack implementation (or an alternative cloud platform).

1.1. Goal

The aim of this document is to give a high-level example of configuring an IPU Pod (IPU-POD64) with OpenStack. It describes the design and implementation of the installation in detail, covering networking, deployment, administration and monitoring features.

The broader details of the OpenStack deployment itself are not described; however, the fundamentals it implements are covered in enough depth that the configuration could be replicated either manually or with a different automation scheme.

1.2. Use cases

IPU Pods support multiple tenants, each with multiple users.

1.2.1. Use case 1

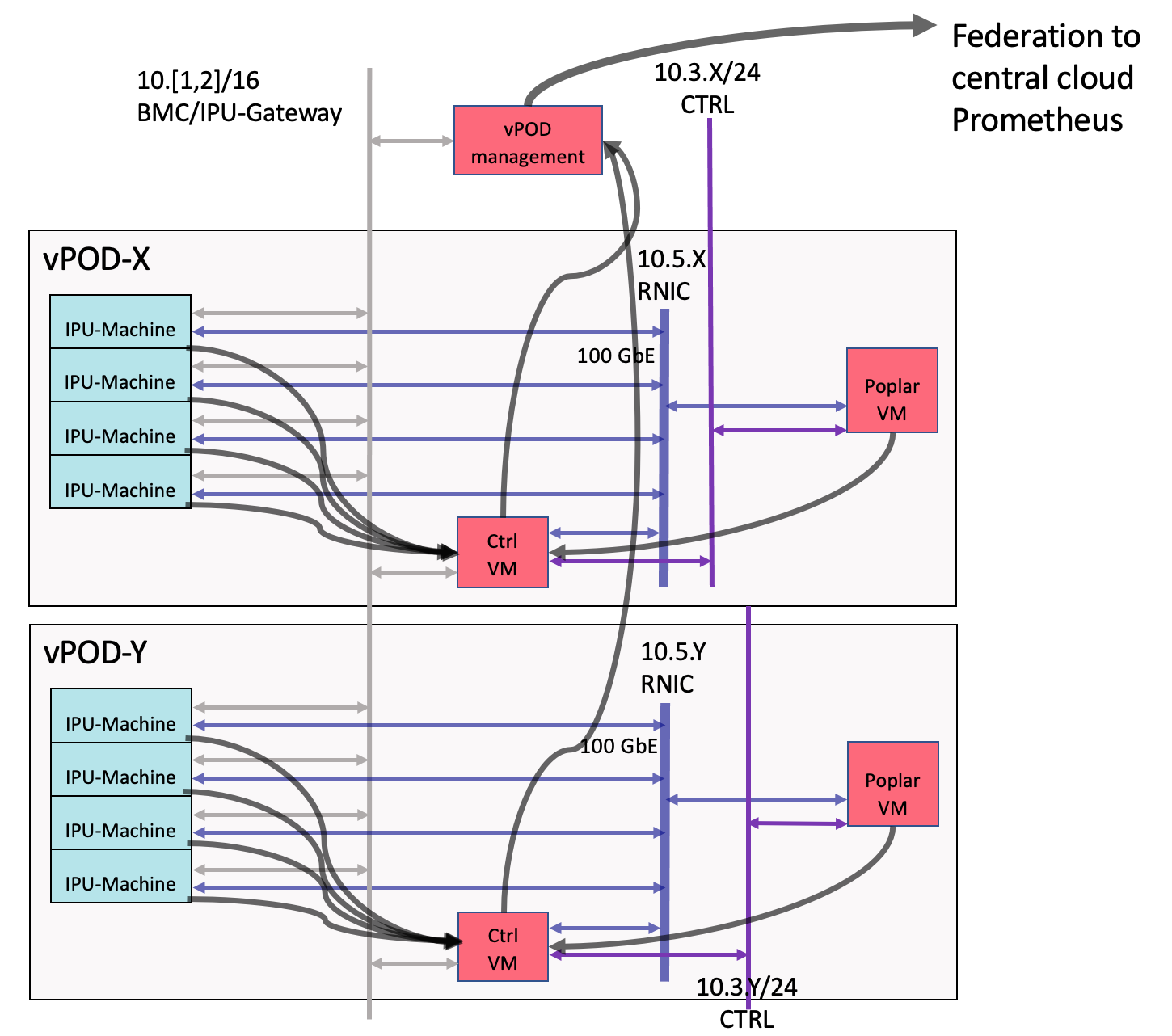

Provisioning of multiple user tenancies, each of which provides CPU and IPU compute in a discrete, secure and flexible virtual IPU Pod (vPOD). Each vPOD requires:

One or more Virtual Machines (Poplar hosts) to execute Poplar workloads

One or more IPU-Machines (IPU-M2000 or Bow-2000)

Access to IPU-Machines from Poplar hosts to execute Poplar workloads on IPUs

A secure network providing SSH access to Poplar hosts

Access to V-IPU and IPU-Machine management services for administrators

Access to V-IPU user services for Poplar hosts

Network storage accessed over secure network connections (optional)
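The requirements above can be captured as a simple data model, which makes it easy to check that a tenant's vPOD is complete and that no IPU-Machine is assigned to two vPODs at once. This is a minimal sketch under stated assumptions: the class `VPodSpec`, the function `shared_machines` and all host and network names are hypothetical and are not part of any Graphcore, V-IPU or OpenStack API. The optional storage requirement is modelled as an optional field.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

# Hypothetical data model of the vPOD requirements in Use case 1.
# All class, field and host names are illustrative assumptions; they are
# not part of any Graphcore, V-IPU or OpenStack API.

IPUS_PER_MACHINE = 4  # each IPU-Machine (IPU-M2000 or Bow-2000) has 4 IPUs


@dataclass
class VPodSpec:
    tenant: str
    poplar_hosts: List[str]              # Poplar host VMs that run Poplar workloads
    ipu_machines: List[str]              # IPU-Machines allocated to this vPOD
    ssh_network: str                     # tenant network giving SSH access to the Poplar hosts
    storage_share: Optional[str] = None  # optional network storage volume

    @property
    def ipu_count(self) -> int:
        # Total IPUs available to the tenant's Poplar workloads
        return IPUS_PER_MACHINE * len(self.ipu_machines)

    def validate(self) -> List[str]:
        """Return the unmet requirements; an empty list means the spec is complete."""
        problems = []
        if not self.poplar_hosts:
            problems.append("at least one Poplar host VM is required")
        if not self.ipu_machines:
            problems.append("at least one IPU-Machine is required")
        if not self.ssh_network:
            problems.append("an SSH access network is required")
        return problems


def shared_machines(specs: List[VPodSpec]) -> Set[str]:
    """Return IPU-Machines assigned to more than one vPOD (vPODs should be discrete)."""
    seen: Set[str] = set()
    shared: Set[str] = set()
    for spec in specs:
        for machine in set(spec.ipu_machines):
            if machine in seen:
                shared.add(machine)
            seen.add(machine)
    return shared


# Example: two tenants that accidentally share one IPU-Machine.
vpod_a = VPodSpec("tenant-a", ["poplar-a1"], ["ipum-01", "ipum-02"], "tenant-a-net")
vpod_b = VPodSpec("tenant-b", ["poplar-b1"], ["ipum-02"], "tenant-b-net")

print(vpod_a.validate())                  # []
print(vpod_a.ipu_count)                   # 8
print(shared_machines([vpod_a, vpod_b]))  # {'ipum-02'}
```

A check like `shared_machines` is one way to express the "discrete" property of a vPOD: each IPU-Machine belongs to exactly one tenant at a time.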

1.3. Generic cloud overview

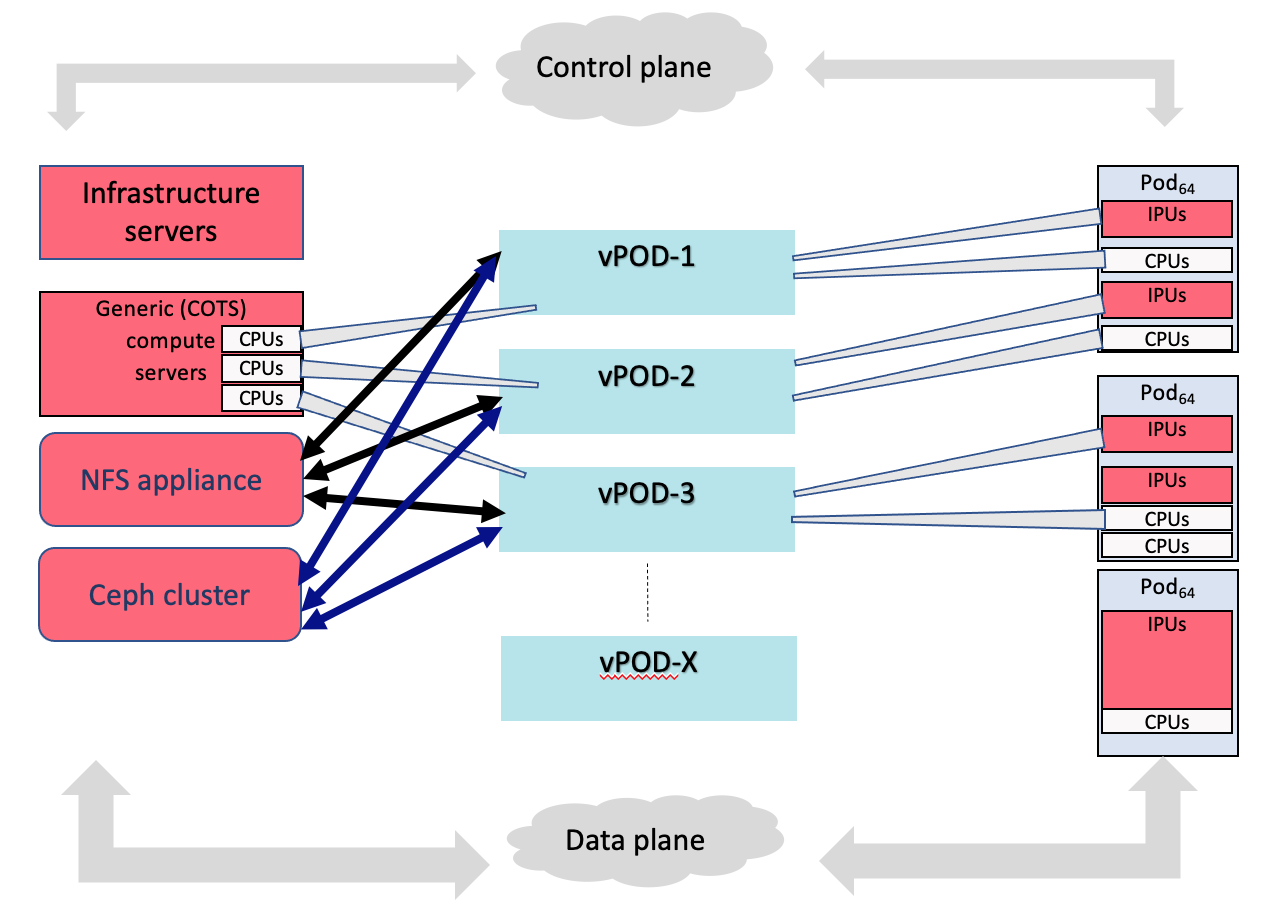

The overall architecture of the components is shown in Fig. 1.1. Virtual IPU Pods are constructed from resources provided by several physical hardware units:

CPU from a cluster of COTS (commercial off-the-shelf) compute servers

CPU from high-specification Poplar servers installed in the IPU Pod (IPU-POD64) clusters

IPUs from IPU-Machines in the IPU Pod (IPU-POD64) clusters

Network storage volumes from dedicated high-performance NFS appliances

Virtual disk volumes mounted from a Ceph storage cluster

Control and data planes overlaid on dedicated 1 Gb (or 10 Gb) and 100 Gb network infrastructures, respectively.

Fig. 1.1 Generic cloud overview - control and data planes

1.4. Cloud physical overview

The reference IPU cloud consists of a number of high-power racks containing IPU Pods, switches, storage and other servers. One or more additional racks contain the OpenStack infrastructure hosts and storage hardware.

A typical layout is shown in Fig. 1.2. Note that each IPU-POD64 is referred to as a logical rack in other sections of this document.

All 1G switches are connected to a dedicated data centre network (not shown) for switch management.

The data centre management network also provides internet connectivity.

Fig. 1.2 Cloud physical overview

1.5. Acronyms and abbreviations

| Term | Description |
|---|---|
| IPU-Machine | A blade with 4 IPUs, such as the IPU-M2000 or Bow-2000 |
| IPU Pod | A rack solution containing IPU-Machines, one or more host servers (also called Poplar servers), network switches and IPU Pod software |
| Poplar server | Host server used by end users to run machine learning workloads on IPU-Machines |
| RDMA | Remote Direct Memory Access |
| RNIC | RDMA Network Interface Controller: 100GbE interface used for fast data transfers between Poplar servers and IPU-Machines |
| RoCE | RDMA over Converged Ethernet: protocol used to transfer data between Poplar servers and IPU-Machines |
| ToR | Top of Rack: used in the term "ToR RDMA switch", the RDMA switch placed on top of the IPU-Machines |
| VIRM | V-IPU Resource Manager: V-IPU agent running on an IPU-Machine |
| V-IPU Controller | A service that manages IPU-Machines using VIRMs |
| VLAN | Virtual LAN: a subnetwork that groups devices on separate physical local area networks |
| VxLAN | Virtual Extensible LAN: a network virtualization technology that improves scalability in large cloud computing deployments |
| vPOD | Virtual POD: a group of IPU-Machines with a dedicated V-IPU Controller and one or more Poplar servers. One vPOD is used by one tenant. A vPOD can have 1, 2, 4, 8, 16 or more IPU-Machines. |
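The vPOD sizes listed in the table translate directly into IPU counts and rack usage. The sketch below works through that arithmetic, assuming 4 IPUs per IPU-Machine (from the table) and 16 IPU-Machines per IPU-POD64 (64 IPUs divided by 4). The function name `vpod_sizing` is hypothetical, and the rack figure is only a lower bound: actual placement of vPODs across logical racks is not modelled here.

```python
# Hypothetical sizing helper for vPODs built from IPU-POD64 logical racks.
# Assumes 4 IPUs per IPU-Machine and 16 IPU-Machines (64 IPUs) per IPU-POD64.

IPUS_PER_MACHINE = 4
MACHINES_PER_POD64 = 16


def vpod_sizing(n_machines: int) -> dict:
    """Return the IPU count, the minimum number of IPU-POD64 logical racks
    spanned, and how many vPODs of this size fit in a single IPU-POD64."""
    if n_machines < 1:
        raise ValueError("a vPOD needs at least one IPU-Machine")
    return {
        "ipus": IPUS_PER_MACHINE * n_machines,
        # Ceiling division: a vPOD larger than 16 IPU-Machines must span racks
        "min_logical_racks": -(-n_machines // MACHINES_PER_POD64),
        # 0 when the vPOD is larger than one IPU-POD64
        "per_pod64": MACHINES_PER_POD64 // n_machines,
    }


for size in (1, 2, 4, 8, 16, 32):
    print(size, vpod_sizing(size))
```

For example, a 16-machine vPOD uses a whole IPU-POD64 (64 IPUs), while a 32-machine vPOD needs at least two logical racks.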