7. Grouping graph replicas

This section details how to use the popart.VariableSettings class to group tensor weights across replicas.

For a detailed description of what a replica is, refer to the Replication section in the IPU Programmer’s Guide.

7.1. Concept

When using graph replication, variables by default contain the same value on all replicas. With VariableSettings, we can assign distinct tensor values to groups of replicas and retrieve tensor values from them, which removes the limitation that all replicas hold the same value.

7.2. VariableSettings

The VariableSettings object is initialized with two values: a CommGroup and a VariableRetrievalMode. The CommGroup sets the communication groups that the variable is divided into across replicas, and the VariableRetrievalMode specifies how the variable is retrieved from the replicas.

The CommGroup class is composed of a CommGroupType enum value and the size of each group. Possible values for CommGroupType are:

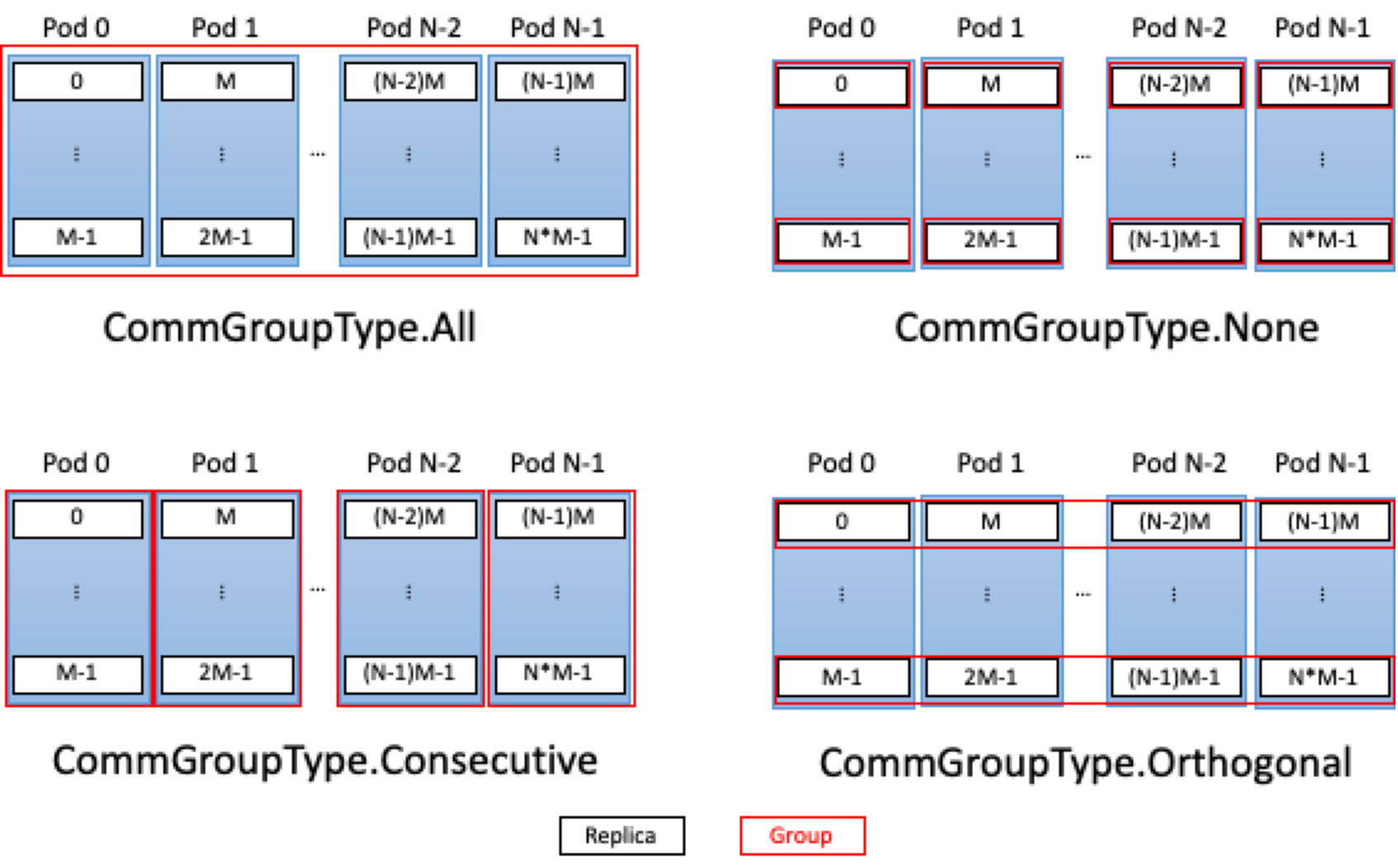

popart.CommGroupType.All: This is the default group type, in which all replicas are considered to be part of one group. This means that all replicas use the same variable values. This CommGroupType ignores the group size. An example of such a grouping is shown in Table 7.1 and in Fig. 7.1.

Table 7.1 Replication factor 16, CommGroupType = All

| Group | Replicas |
|-------|----------|
| 0 | 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15 |

popart.CommGroupType.Consecutive: Adjacent replicas (based on replica index) are grouped together. Each group has a size equal to the size that CommGroup is instantiated with. For example, for 16 replicas (replica index = 0 to 15) and a group size of 4, the groups are assigned as shown in Table 7.2 and in Fig. 7.1.

Table 7.2 Replication factor 16, CommGroupType = Consecutive, CommGroup size = 4

| Group | Replicas |
|-------|----------|
| 0 | 0, 1, 2, 3 |
| 1 | 4, 5, 6, 7 |
| 2 | 8, 9, 10, 11 |
| 3 | 12, 13, 14, 15 |

popart.CommGroupType.Orthogonal: Replicas are assigned to groups with a stride equal to the number of groups, num_groups, where \(\text{num\_groups} = \frac{\text{number of replicas}}{\text{group size}}\). For example, for 16 replicas (replica index = 0 to 15) and a group size of 4, there will be four groups and they are assigned as shown in Table 7.3 and in Fig. 7.1.

Table 7.3 Replication factor 16, CommGroupType = Orthogonal, CommGroup size = 4

| Group | Replicas |
|-------|----------|
| 0 | 0, 4, 8, 12 |
| 1 | 1, 5, 9, 13 |
| 2 | 2, 6, 10, 14 |
| 3 | 3, 7, 11, 15 |

popart.CommGroupType.None: Each replica is in its own group. For example, for 16 replicas (replica index = 0 to 15), the groups are as shown in Table 7.4 and in Fig. 7.1.

Table 7.4 Replication factor 16, CommGroupType = None

| Group | Replicas |
|-------|----------|
| 0 | 0 |
| 1 | 1 |
| 2 | 2 |
| … | … |
| 14 | 14 |
| 15 | 15 |

Fig. 7.1 How replicas across multiple Pods are assigned to groups for the different CommGroupType values. There are N Pods and each has M replicas. Replicas are numbered sequentially down each Pod first before moving across to the next Pod.
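The four grouping rules can be modelled in a few lines of plain Python, which may help when checking which replicas share a value. This is a minimal sketch, not PopART code: the function name replica_groups and the string group-type names are hypothetical, and it assumes the group size divides the replication factor. Under that assumption, Consecutive places replica r in group r // group_size, and Orthogonal places it in group r % num_groups.

```python
def replica_groups(replication_factor, group_type, group_size=0):
    """Hypothetical helper mirroring Tables 7.1 to 7.4.

    Returns a list of groups, each a list of replica indices.
    group_type is one of "All", "Consecutive", "Orthogonal" or "None".
    """
    replicas = range(replication_factor)
    if group_type == "All":
        # One group containing every replica; the group size is ignored.
        return [list(replicas)]
    if group_type == "None":
        # Every replica forms its own group.
        return [[r] for r in replicas]
    if group_size <= 0 or replication_factor % group_size != 0:
        raise ValueError("group size must divide the replication factor")
    num_groups = replication_factor // group_size
    if group_type == "Consecutive":
        # Adjacent replica indices share a group.
        return [list(range(g * group_size, (g + 1) * group_size))
                for g in range(num_groups)]
    if group_type == "Orthogonal":
        # Group g holds replicas g, g + num_groups, g + 2 * num_groups, ...
        return [list(range(g, replication_factor, num_groups))
                for g in range(num_groups)]
    raise ValueError(f"unknown group type: {group_type}")


for kind in ("Consecutive", "Orthogonal"):
    print(kind, replica_groups(16, kind, 4))
```

With 16 replicas and a group size of 4, the printed groups match Tables 7.2 and 7.3.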

7.3. Instantiating variables with VariableSettings

The number of replicas (the replication factor) is needed to create variables with popart.VariableSettings, because the number of communication groups that require initialization, and thus the size of the instantiating buffer, depends on the replication factor.

popart.VariableSettings can be passed in the call to the builder function addInitializedInputTensor() when initializing your variable.

The instantiating buffers used for creating these variables must be sized so that they initialize each group individually. This is done by adding an outer dimension, equal to the number of groups, to the instantiating buffer; the graph builder handles the rest. For example, a tensor with shape [2, 3, 4] and a replication factor that results in four groups must be instantiated with a shape of [4, 2, 3, 4], where [g, ...] initializes the variable on the replicas in group g.
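The sizing rule above can be sketched in plain Python to show which slice of the instantiating buffer each replica receives. This is an illustration under stated assumptions, not PopART's implementation: the helper names init_buffer_shape and group_of_replica are hypothetical, and the sketch assumes that outer index g of the buffer corresponds to group g as numbered in Tables 7.1 to 7.4.

```python
def init_buffer_shape(base_shape, num_groups):
    """Shape of the instantiating buffer: one outer entry per group."""
    return [num_groups] + list(base_shape)


def group_of_replica(replica, replication_factor, group_type, group_size=0):
    """Hypothetical helper: the outer index of the instantiating buffer
    assumed to supply the initial value for `replica`."""
    if group_type == "All":
        return 0  # a single group shared by every replica
    if group_type == "None":
        return replica  # each replica is its own group
    num_groups = replication_factor // group_size
    if group_type == "Consecutive":
        return replica // group_size  # adjacent replicas share a group
    if group_type == "Orthogonal":
        return replica % num_groups  # replicas strided by num_groups
    raise ValueError(f"unknown group type: {group_type}")


# The example from the text: base shape [2, 3, 4] with four groups.
print(init_buffer_shape([2, 3, 4], 4))  # [4, 2, 3, 4]

# With 8 replicas and groups of size 2 (so four groups), replica 5 is
# initialized from buffer[2] for Consecutive and buffer[1] for Orthogonal.
print(group_of_replica(5, 8, "Consecutive", 2))  # 2
print(group_of_replica(5, 8, "Orthogonal", 2))   # 1
```

Keeping the group count as the only extra dimension means the buffer holds exactly one initial value per group, however many replicas each group contains.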

7.4. Weight input/output

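When using PyWeightsIO to read the value of the weights, the buffer size must match the size of the data that will be retrieved. If VariableRetrievalMode is OnePerGroup, the outer dimension must match the number of groups, as for the initializing data; if VariableRetrievalMode is AllReplicas, the outer dimension must match the replication factor.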

For example, using VariableSettings with a tensor of shape [2, 3, 4], a replication factor of 4 and a CommGroup instantiated with Consecutive and a group size of 2 (which gives two groups), we need a buffer for PyWeightsIO with one of the following shapes:

[2, 2, 3, 4] if we use popart.VariableRetrievalMode.OnePerGroup.

[4, 2, 3, 4] if we use popart.VariableRetrievalMode.AllReplicas.
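The retrieval-mode rule can be written as a small shape calculation in plain Python. This is a minimal sketch: io_buffer_shape is a hypothetical helper and the mode strings only mirror the names of popart.VariableRetrievalMode. In PopART itself, Listing 7.1 obtains the outer dimension from VariableSettings.numReplicasReturningVariable().

```python
def io_buffer_shape(base_shape, replication_factor, num_groups, mode):
    """Hypothetical helper: shape of the PyWeightsIO buffer for a variable.

    mode is "OnePerGroup" or "AllReplicas", mirroring the names of
    popart.VariableRetrievalMode.
    """
    if mode == "OnePerGroup":
        outer = num_groups  # one copy of the variable per group
    elif mode == "AllReplicas":
        outer = replication_factor  # one copy per replica
    else:
        raise ValueError(f"unknown retrieval mode: {mode}")
    return [outer] + list(base_shape)


# Example from the text: shape [2, 3, 4], replication factor 4,
# Consecutive groups of size 2, so 4 // 2 = 2 groups.
num_groups = 4 // 2
print(io_buffer_shape([2, 3, 4], 4, num_groups, "OnePerGroup"))  # [2, 2, 3, 4]
print(io_buffer_shape([2, 3, 4], 4, num_groups, "AllReplicas"))  # [4, 2, 3, 4]
```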

The host buffers registered with PyWeightsIO are populated by the session readWeights() function, for training or for inference.

Listing 7.1 shows an example of creating buffers for replicas.

```python
# Copyright (c) 2022 Graphcore Ltd. All rights reserved.
import popart
import numpy
from popart import CommGroup, CommGroupType
from popart import VariableRetrievalMode, VariableSettings

builder = popart.Builder()

# replication factor
repl_factor = 4
# Simple base shape of variable on replica
base_shape = [3, 5]
# size of each group
group_size = 2
# The CommGroup we plan to use
communication_group = CommGroup(CommGroupType.Consecutive, group_size)

# VariableSettings to read from groups
settings_grouped = VariableSettings(
    communication_group, VariableRetrievalMode.OnePerGroup
)
# VariableSettings to read from all replicas
settings_individual = VariableSettings(
    communication_group, VariableRetrievalMode.AllReplicas
)

# get init buffer: the outer dimension is the number of groups
num_groups = settings_grouped.getGroupCount(repl_factor)
shape = [num_groups] + base_shape
initializer = numpy.zeros(shape).astype(numpy.float32)  # example
print(initializer.dtype)

# Creating Variables
a = builder.addInitializedInputTensor(initializer, settings_grouped)
b = builder.addInitializedInputTensor(initializer, settings_individual)

# get IO buffer shapes
shape_a = [settings_grouped.numReplicasReturningVariable(repl_factor)] + base_shape
shape_b = [settings_individual.numReplicasReturningVariable(repl_factor)] + base_shape

# get IO buffers, with the same dtype as the variables
buffer_a = numpy.zeros(shape_a, dtype=numpy.float32)
buffer_b = numpy.zeros(shape_b, dtype=numpy.float32)

# finalize IO buffers
weightsIo = popart.PyWeightsIO({a: buffer_a, b: buffer_b})
```

7.5. ONNX checkpoints

ONNX is not, by default, aware of the replication factor. Therefore, when grouped variables are stored in an ONNX model, their outermost (group) dimension is interpreted as part of the tensor on each replica. This will usually break the logic of the model.

To address this, the builder function embedReplicationFactor() writes the replication factor into the ONNX model as an attribute of the graph.

The builder does not need the replication factor to be embedded when resetHostWeights() is used, for training or for inference, to write the weights from an ONNX file into a new model.