1. Introduction

The purpose of this document is to introduce the TensorFlow framework from the perspective of developing and training models for the IPU. It assumes you have some knowledge of machine learning and TensorFlow.

For more information about the IPU architecture, abstract programming model and tools, as well as algorithmic techniques, refer to the IPU Programmer’s Guide. The contains guidelines for optimising performance in machine learning models running on the IPU.

Note

This document is for TensorFlow 1. For information on TensorFlow 2 please refer to Targeting the IPU from TensorFlow 2.

See the “Getting Started” guide for your IPU system in the Graphcore documentation for information on installing the Poplar SDK, and refer to the for installing TensorFlow 1 and running a simple application.

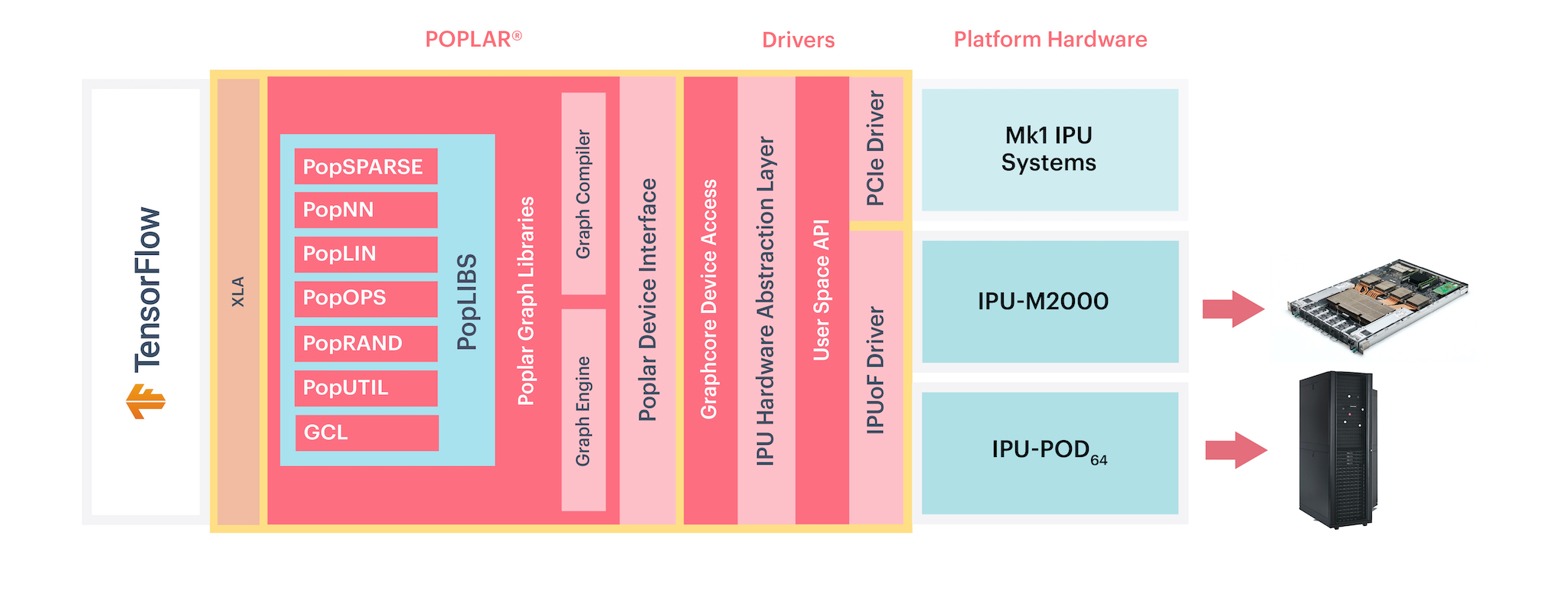

TensorFlow is a powerful graph-modelling framework that can be used for the development, training and deployment of deep learning models. In the Graphcore software stack, TensorFlow sits at the highest level of abstraction. Poplar and PopLibs provide a software interface to operations running on the IPU. XLA (Accelerated Linear Algebra) is a domain-specific compiler for linear algebra that can accelerate TensorFlow models. TensorFlow graphs are compiled into Poplar executables using our XLA backend.

Fig. 1.1 TensorFlow abstraction in relation to Poplar and the IPU

The Graphcore implementation of TensorFlow differs from standard TensorFlow in two main ways:

- Some machine-learning ops are optimised for the IPU hardware. For example, our custom dropout op is designed to use less memory by not storing the dropout mask between forward and backward passes.
- It provides extra IPU-specific functions, such as those for selecting and configuring IPUs.
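The memory saving mentioned for the dropout op can be illustrated with a small sketch. It assumes the mask is regenerated from a saved seed during the backward pass, which is one common way to avoid storing it. This document does not say how the Graphcore op achieves this. The function names (`dropout_mask`, `dropout_forward`, `dropout_backward`) are hypothetical, and the code is plain Python rather than the IPU op or any TensorFlow API.

```python
import random


def dropout_mask(seed, size, rate):
    """Build the keep/drop mask deterministically from a seed (hypothetical helper)."""
    rng = random.Random(seed)
    return [rng.random() >= rate for _ in range(size)]


def dropout_forward(values, rate, seed):
    """Apply inverted dropout. The mask is used here and then discarded."""
    scale = 1.0 / (1.0 - rate)
    mask = dropout_mask(seed, len(values), rate)
    return [v * scale if keep else 0.0 for v, keep in zip(values, mask)]


def dropout_backward(upstream_grads, rate, seed):
    """Rebuild the same mask from the seed and pass gradients through it."""
    scale = 1.0 / (1.0 - rate)
    mask = dropout_mask(seed, len(upstream_grads), rate)
    return [g * scale if keep else 0.0 for g, keep in zip(upstream_grads, mask)]


# Assumption for illustration: only the seed is kept between the two passes.
activations = [0.5, -1.0, 2.0, 3.0, 0.25, -0.75]
outputs = dropout_forward(activations, rate=0.5, seed=1234)
grads = dropout_backward([1.0] * len(activations), rate=0.5, seed=1234)
```

In this sketch only the integer seed is carried between the passes, so the extra memory does not grow with the tensor size. The cost is that the random numbers are generated twice.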

Implementing programs at the framework level is relatively independent of the underlying hardware. The process of defining a graph and its components (for example, how a convolutional layer is defined) is largely the same when targeting the IPU.

You need to make a small number of changes to your TensorFlow code, when constructing and executing a model, in order to target the IPU efficiently. These changes cover IPU-specific API configurations, model parallelism, error logging and report generation, as well as strategies for dealing with out-of-memory (OOM) issues. Each is described in the appropriate section of this document.

Please note that using Keras layers to build models is supported for TensorFlow 1. However, you have to define the training loop manually, because Keras API calls such as the fit, evaluate and predict methods on Model or Sequential are not supported.
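To show what defining the training loop manually involves, the following is a minimal sketch of the steps that a call to fit would otherwise hide: iterating over the data, running the forward pass, computing the loss and updating the parameters. It is written in plain Python with a hypothetical one-parameter-pair linear model, and uses no TensorFlow, Keras or IPU API. In a real program, the forward pass and update would be graph operations executed on the IPU.

```python
def forward(w, b, x):
    """Forward pass of a toy linear model (stand-in for a stack of layers)."""
    return w * x + b


def train(data, epochs=200, learning_rate=0.05):
    """Explicit training loop: the work a fit-style call would do for you."""
    w, b = 0.0, 0.0
    losses = []
    for _ in range(epochs):
        epoch_loss = 0.0
        for x, y in data:
            error = forward(w, b, x) - y
            epoch_loss += error * error
            # Gradient of the squared error with respect to w and b.
            w -= learning_rate * 2.0 * error * x
            b -= learning_rate * 2.0 * error
        losses.append(epoch_loss / len(data))
    return w, b, losses


# Toy data drawn from y = 2x + 1 (an assumption for illustration only).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b, losses = train(data)
```

Evaluation and prediction follow the same pattern: an explicit loop that runs only the forward pass, without the parameter update.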

1.1. Document overview

This document starts with a tutorial overview of TensorFlow for the IPU. This describes how to execute a graph on an IPU, how to select the IPU hardware to run on, and a simple approach to using multiple IPUs.

The next section provides information about selecting specific IPU hardware and configuring the code to run on it.

The following sections provide information on various methods related to training a model on the IPU.

The next few sections provide information on IPU-specific features.

Finally, there are reference chapters describing the API and supported operators.

You can find further information on porting a TensorFlow program to the IPU, and on parallelising it, in our TensorFlow technical notes.