13. Variables in Streaming Memory

When IPU memory is insufficient, you can use remote buffers to store data in Streaming Memory and load it back when it is needed. Remote buffers are often used for variable tensors and for intermediate tensors. In this section, you will see how to use the following in PopXL:

remote buffers

remote variable tensors

replicated tensor sharding variables

13.1. Remote buffers

In PopXL, you can create a remote buffer in the IR by using remote_buffer(tensor_shape, tensor_dtype, entries). The remote buffer contains a number of slots (entries) for tensors that all have the same shape (tensor_shape) and data type (tensor_dtype).

You can then store a tensor t at index offset of a remote buffer remote_buffer by using the operation remote_store(remote_buffer, offset, t). To load the tensor at index offset of the remote buffer remote_buffer, you can use remote_load(remote_buffer, offset, name). You can also name the returned tensor with name.
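To make the slot semantics concrete, here is a minimal pure-Python sketch of a remote buffer. It is a toy model, not PopXL code: the class ToyRemoteBuffer and its methods are hypothetical names invented for illustration, plain lists stand in for one-dimensional tensors, and the data type check is left out. It assumes only what is described above: a fixed number of entries, all with the same shape, addressed by an integer offset.

```python
from typing import List, Optional, Tuple


class ToyRemoteBuffer:
    """Hypothetical stand-in for a remote buffer: `entries` slots of one shape."""

    def __init__(self, tensor_shape: Tuple[int, ...], entries: int) -> None:
        self.tensor_shape = tensor_shape
        self.entries = entries
        # Each slot is empty until something is stored in it.
        self._slots: List[Optional[List[int]]] = [None] * entries

    def _check_offset(self, offset: int) -> None:
        if not 0 <= offset < self.entries:
            raise IndexError(f"offset {offset} is outside 0..{self.entries - 1}")

    def store(self, offset: int, t: List[int]) -> None:
        """Toy model of remote_store: copy `t` into the slot at `offset`."""
        self._check_offset(offset)
        if (len(t),) != self.tensor_shape:
            raise ValueError(f"expected shape {self.tensor_shape}, got {(len(t),)}")
        self._slots[offset] = list(t)

    def load(self, offset: int) -> List[int]:
        """Toy model of remote_load: return a copy of the slot at `offset`."""
        self._check_offset(offset)
        value = self._slots[offset]
        if value is None:
            raise ValueError(f"slot {offset} has not been written yet")
        return list(value)


# Two slots, each holding a 1-D tensor with three elements.
toy_buffer = ToyRemoteBuffer(tensor_shape=(3,), entries=2)
toy_buffer.store(0, [1, 2, 3])
toy_buffer.store(1, [4, 5, 6])

# Load slot 0, update the local copy, and write it back to the same slot.
loaded = toy_buffer.load(0)
loaded = [v + 10 for v in loaded]
toy_buffer.store(0, loaded)
```

The load, update, store round trip at the end mirrors how a tensor kept in Streaming Memory is typically used: the copy that is worked on lives outside the buffer, so the buffer only changes when the result is stored back.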

13.2. Remote variable tensors

As with an ordinary variable tensor (Section 6.2, Variable tensors), you can create a variable tensor located in Streaming Memory by using remote_variable():

    remote_variable(data: Union[HostTensor, float, int],
                    remote_buffer: RemoteBuffer,
                    offset: int = 0,
                    dtype: Optional[dtypes.dtype] = None,
                    name: Optional[str] = None,
                    downcast: bool = True)

The returned variable tensor, with value data, is placed at index offset of the remote buffer remote_buffer. The data type and shape of this variable tensor need to be compatible with those of the remote buffer.

Listing 13.1 shows how to use remote buffers and remote variable tensors. First, a remote buffer, buffer, is created with only one entry. Then a remote variable tensor, remote_x, is created with value 1 and stored at index 0 of the buffer. The value is then loaded from the remote buffer into the tensor loaded_x on the IPU. Next, loaded_x is updated in place by adding y, which has value 2. The new value of loaded_x is then stored back at index 0 of buffer, the same place as remote_x. After you run a session, you can check the value of remote_x by using session.get_tensor_data(remote_x). Both loaded_x and remote_x have the value 3 in this example.

    import numpy as np
    import popxl
    import popxl.ops as ops
    from popxl import dtypes

    ir = popxl.Ir()
    main = ir.main_graph

    with main, popxl.in_sequence():
        x = np.array(1).astype(np.int32)

        # Create a remote buffer with a single entry
        buffer = popxl.remote_buffer(x.shape, dtypes.int32, 1)

        # Create a remote variable and place it in the buffer at index 0
        remote_x = popxl.remote_variable(x, buffer, 0)

        # Load the remote variable from index 0 of the buffer
        loaded_x = ops.remote_load(buffer, 0)

        # Calculation on the IPU to update the loaded tensor in place
        y = popxl.variable(2)
        ops.var_updates.accumulate_(loaded_x, y)

        # Store the updated value back to the remote buffer at index 0
        ops.remote_store(buffer, 0, loaded_x)

13.3. Variable tensors for replicated tensor sharding

You can also create a variable tensor for replicated tensor sharding (RTS), which is split into equal shards across replicas. See the PopART User Guide for more information.

Together with the allGather operation replicated_all_gather(), RTS avoids storing the same tensor on every replica. The full tensor is stored in Streaming Memory. After the full tensor is updated on the IPU, it needs to be sharded and/or reduced again to each replica by using the reduceScatter operation replicated_reduce_scatter().
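The data movement of the two collectives can be sketched in pure Python. This is a toy model, not the PopXL operations: all_gather and reduce_scatter below are hypothetical helper functions, lists stand in for one-dimensional tensors, and the outer list index plays the role of the replica index. The sketch assumes that shards are concatenated in replica order and that the reduction is a sum.

```python
from typing import List


def all_gather(shards: List[List[int]]) -> List[List[int]]:
    """Every replica receives the concatenation of all shards, in replica order."""
    full = [v for shard in shards for v in shard]
    return [list(full) for _ in shards]


def reduce_scatter(full_per_replica: List[List[int]]) -> List[List[int]]:
    """Sum the full tensors element-wise across replicas, then give each
    replica one equal slice of the result."""
    num_replicas = len(full_per_replica)
    length = len(full_per_replica[0])
    if length % num_replicas != 0:
        raise ValueError("tensor length must be divisible by the number of replicas")
    reduced = [sum(column) for column in zip(*full_per_replica)]
    shard_size = length // num_replicas
    return [reduced[r * shard_size:(r + 1) * shard_size] for r in range(num_replicas)]


# Two replicas, each holding one shard of the tensor [1, 2, 3, 4].
shards = [[1, 2], [3, 4]]
gathered = all_gather(shards)

# Each replica holds a different full-size tensor (for example, its own gradient).
grads = [[1, 1, 1, 1], [2, 2, 2, 2]]
new_shards = reduce_scatter(grads)
```

In this model each replica only ever keeps a shard between steps. The full tensor exists on a replica only for the duration of the computation that needs it.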

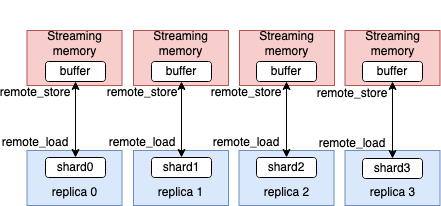

In PopXL, each shard of an RTS variable tensor is stored in its own remote buffer. To simplify the use of replication, each shard shares the same representation of its remote buffer. As shown in Fig. 13.1, each buffer has the same tensor type and tensor shape in each shard. The number of shards is the same as the number of replicas.

Fig. 13.1 An RTS variable tensor in PopXL

Note that you need to have replication enabled to create an RTS variable tensor. You can enable replication by setting replication_factor to a value greater than 1 (Section 12.5, Data input shape).

There are two ways to create an RTS variable tensor:

Store the full variable tensor in Streaming Memory. You can access the variable tensor through remote_buffer.

    remote_replica_sharded_variable(data: Union[HostTensor, float, int],
                                    remote_buffer: RemoteBuffer,
                                    offset: int = 0,
                                    dtype: Optional[dtypes.dtype] = None,
                                    name: Optional[str] = None,
                                    downcast: bool = True) -> Variable

remote_replica_sharded_variable() returns an RTS variable tensor that has value data at index offset of remote buffer remote_buffer. You need to use remote_load() and remote_store() operations to load and store the variable tensor data to and from the IPU.

Store the full variable tensor in Streaming Memory, along with another tensor to represent its shards. The tensor representing the shards can be used without remote_load() and remote_store() since it is automatically loaded from or stored to Streaming Memory.

    replica_sharded_variable(data: Union[HostTensor, float, int],
                             dtype: Optional[dtypes.dtype] = None,
                             name: Optional[str] = None,
                             downcast: bool = True) -> Tuple[Variable, Tensor]

In replica_sharded_variable(), the variable tensor is still created with a remote buffer, as for remote_replica_sharded_variable(). The number of entries in this buffer is the number of elements in the data divided by the number of replicas. Each shard is then automatically loaded or stored according to the execution context. However, the remote buffer is hidden to provide an easier interface. You can use remote_replica_sharded_variable() to have more flexibility.

The example in the code tab Remote RTS variable tensor shows how to update the value of a remote RTS variable tensor created with remote_replica_sharded_variable():

The remote RTS variable tensor remote_x is created with a remote buffer buffer.

Remote load tensor remote_x to tensor loaded_x.

Gather the shards of the tensor loaded_x to tensor full_x.

Update the tensor full_x in place by adding tensor y.

Shard tensor full_x across replicas to tensor updated_shard.

Remote store tensor updated_shard to index 0, the same place as tensor remote_x, in the remote buffer buffer.
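The six steps above can be traced by hand with a small pure-Python simulation. This is only a sketch of the data flow under stated assumptions, not PopXL code: nested lists stand in for the per-replica remote buffers and tensors, there are two replicas, and x = [1, 2] and y = [3, 4] are chosen to match the listing.

```python
from typing import List

NUM_REPLICAS = 2
x = [1, 2]
y = [3, 4]
shard_size = len(x) // NUM_REPLICAS

# Step 1: one toy "remote buffer" per replica, each with a single entry
# (index 0) holding that replica's shard of x.
buffers: List[List[List[int]]] = [
    [x[r * shard_size:(r + 1) * shard_size]] for r in range(NUM_REPLICAS)
]

# Step 2: each replica loads its shard from entry 0 of its buffer.
loaded_x = [list(buffers[r][0]) for r in range(NUM_REPLICAS)]

# Step 3: gather the shards so that every replica holds the full tensor.
full = [v for shard in loaded_x for v in shard]
full_x = [list(full) for _ in range(NUM_REPLICAS)]

# Step 4: update the full tensor in place on every replica by adding y.
for replica_copy in full_x:
    for i, value in enumerate(y):
        replica_copy[i] += value

# Step 5: each replica keeps only its own slice of the updated tensor.
updated_shard = [
    full_x[r][r * shard_size:(r + 1) * shard_size] for r in range(NUM_REPLICAS)
]

# Step 6: store each updated shard back at index 0 of its buffer.
for r in range(NUM_REPLICAS):
    buffers[r][0] = updated_shard[r]

# Reading the shards back in replica order gives the updated full tensor.
final_x = [v for r in range(NUM_REPLICAS) for v in buffers[r][0]]
print(final_x)  # [4, 6]
```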

The example in the code tab RTS variable tensor shows how to update the RTS variable tensor created by replica_sharded_variable(). In this example, you can see that the remote store and load operations are hidden.

A remote RTS variable tensor remote_x and its shards loaded_x are created without specifying a buffer.

Then, the shards loaded_x are updated by adding the sharded tensor y.
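The same update can be traced when each replica works directly on its shard. The following pure-Python sketch is an illustration of the data flow only, not PopXL code: shard_of is a hypothetical helper, lists stand in for tensors, and two replicas with x = [1, 2] and y = [3, 4] are assumed.

```python
from typing import List

NUM_REPLICAS = 2
x = [1, 2]
y = [3, 4]
shard_size = len(x) // NUM_REPLICAS


def shard_of(t: List[int], replica: int) -> List[int]:
    """Return the equal-sized slice of `t` that belongs to `replica`."""
    return t[replica * shard_size:(replica + 1) * shard_size]


# Each replica starts with its shard of x already available (the hidden load).
loaded_x = [shard_of(x, r) for r in range(NUM_REPLICAS)]

# y is sliced the same way so that the shapes match on each replica.
sharded_y = [shard_of(y, r) for r in range(NUM_REPLICAS)]

# Each replica adds its shard of y to its shard of x.
loaded_x = [[a + b for a, b in zip(xs, ys)] for xs, ys in zip(loaded_x, sharded_y)]

# Concatenating the shards in replica order gives the full updated tensor.
final_x = [v for shard in loaded_x for v in shard]
print(final_x)  # [4, 6]
```

No replica ever materialises the full tensor in this variant, which is why y has to be sliced before the addition.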

Remote RTS variable tensor

    import numpy as np
    import popxl
    import popxl.ops as ops
    from popxl import dtypes

    ir = popxl.Ir()
    ir.replication_factor = 2
    ir.num_host_transfers = 1

    with ir.main_graph, popxl.in_sequence():
        # Create an RTS variable remote_x with a buffer sized for one shard
        x = np.array([1, 2]).astype(np.int32)
        buffer = popxl.remote_buffer((x.size // 2,), dtypes.int32, 1)
        remote_x = popxl.remote_replica_sharded_variable(x, buffer, 0)
        # Load this replica's shard of remote_x into loaded_x
        loaded_x = ops.remote_load(buffer, 0)

        # Create a variable y
        y = popxl.variable([3, 4])

        # Add y to the all-gathered full x
        full_x = ops.collectives.replicated_all_gather(loaded_x)
        ops.var_updates.accumulate_(full_x, y)

        # Slice the updated full x and store each shard to its buffer
        updated_shard = ops.collectives.replica_sharded_slice(full_x)
        ops.remote_store(buffer, 0, updated_shard)

    # Execute the IR
    with popxl.Session(ir, "ipu_model") as session:
        outputs = session.run({})
        # Get the updated x value
        final_weight = session.get_tensor_data(remote_x)

RTS variable tensor

    import numpy as np
    import popxl
    import popxl.ops as ops
    from popxl import dtypes

    ir = popxl.Ir()
    ir.replication_factor = 2
    ir.num_host_transfers = 1

    with ir.main_graph, popxl.in_sequence():
        # Create an RTS variable remote_x and its shards loaded_x
        x = np.array([1, 2]).astype(np.int32)
        remote_x, loaded_x = popxl.replica_sharded_variable(x, dtypes.int32)

        # Create a variable and shard it across replicas
        y = popxl.variable([3, 4])
        sharded_y = ops.collectives.replica_sharded_slice(y)

        # Add each shard of y to each shard of x
        ops.var_updates.accumulate_(loaded_x, sharded_y)

    # Execute the IR
    with popxl.Session(ir, "ipu_model") as session:
        outputs = session.run({})

    # Get the updated x value
    final_weight = session.get_tensor_data(remote_x)